Large-scale investigation confirms TRPM3 ion channel dysfunction in ME/CFS, 2025, Marshall-Gradisnik et al

- Thread starter John Mac

Like another study from this group that was posted here, it looks like the p-values are artificially low due to pseudoreplication. I'll just quote the last time I said it, since it's the same issue, just with the sample size changed:

It all sounded quite interesting!

Do you think it is intentional? Or is it just difficult/unusual to avoid this, thus likely to be an accident.

Here's another resource explaining pseudoreplication, and they say it's likely that researchers are usually just unaware it's an issue:

3 Independence & pseudoreplication

Pseudoreplication is unfortunately quite a big problem in biological and clinical research, probably because many people aren’t really aware of the issue or how to recognise whether they’re accidentally doing it in their analysis. Several review articles have investigated the incidence of pseudoreplication in published papers, and have estimated that as many as 50% of papers in various fields may suffer from this problem, including neuroscience, animal experiments and cell culture and primate research. In fields like ecology and conservation, the estimated figure is sometimes even higher.
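To make the problem concrete, here is a minimal simulation sketch. Everything in it is an assumption for illustration, not anything taken from the paper: the group sizes, the number of cells per person, the variance values, and the function names are all hypothetical. It simulates two groups with no true difference, where cells from the same person share a person-level baseline, and then compares how often a Mann-Whitney test (normal approximation, written with the standard library only) comes out "significant" when cells are pooled versus when each person is first reduced to one average.

```python
import random
from statistics import NormalDist, mean


def mann_whitney_p(a, b):
    """Two-sided Mann-Whitney p-value (normal approximation, assumes no ties)."""
    n1, n2 = len(a), len(b)
    # U statistic: number of (x, y) pairs with x from a larger than y from b.
    u = sum(1 for x in a for y in b if x > y)
    mu = n1 * n2 / 2
    sigma = (n1 * n2 * (n1 + n2 + 1) / 12) ** 0.5
    z = (u - mu) / sigma
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_group(rng, n_people, cells_per_person, person_sd, cell_sd):
    """Return one list of cell measurements per person (no group effect added)."""
    people = []
    for _ in range(n_people):
        baseline = rng.gauss(0, person_sd)  # shared by all of this person's cells
        people.append(
            [baseline + rng.gauss(0, cell_sd) for _ in range(cells_per_person)]
        )
    return people


def false_positive_rates(n_sims=500, n_people=8, cells_per_person=5,
                         person_sd=1.0, cell_sd=0.5, alpha=0.05, seed=0):
    """Fraction of null simulations with p < alpha: (pooled cells, per-person means)."""
    rng = random.Random(seed)
    pooled_hits = 0
    person_hits = 0
    for _ in range(n_sims):
        g1 = simulate_group(rng, n_people, cells_per_person, person_sd, cell_sd)
        g2 = simulate_group(rng, n_people, cells_per_person, person_sd, cell_sd)
        # Pseudoreplicated analysis: every cell is treated as independent.
        cells1 = [c for person in g1 for c in person]
        cells2 = [c for person in g2 for c in person]
        if mann_whitney_p(cells1, cells2) < alpha:
            pooled_hits += 1
        # Per-person analysis: one averaged value per participant.
        if mann_whitney_p([mean(p) for p in g1], [mean(p) for p in g2]) < alpha:
            person_hits += 1
    return pooled_hits / n_sims, person_hits / n_sims


if __name__ == "__main__":
    pooled, per_person = false_positive_rates()
    print(f"false positives, cells pooled:     {pooled:.3f}")
    print(f"false positives, per-person means: {per_person:.3f}")
```

Under these assumed settings the pooled analysis should flag a "difference" far more often than the nominal 5%, while the per-person analysis should stay close to it. How large the inflation is depends on how similar cells from the same person are, which I have simply guessed here.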

RedFox

Senior Member (Voting Rights)

I don't get it. How could you do that accidentally? I'm not a scientist or a researcher, my training in statistics consists of one college course. However, it's intuitively obvious to me that if you test multiple samples per person, you need to correct for that somehow.

Kitty

Senior Member (Voting Rights)

my training in statistics consists of one college course

Is it possible some researchers haven't done a great deal more than that?

hotblack

Senior Member (Voting Rights)

Is it possible some researchers haven't done a great deal more than that?

Even if they know, the motivations to pump up your paper seem significant. The incentive structures for sticking to the facts in a measured way seem far less albeit more worthy.

jnmaciuch

Senior Member (Voting Rights)

Is it possible some researchers haven't done a great deal more than that?

Yup, my program only requires one very basic stats course (I’m taking more advanced courses because I’m specializing in bioinformatics; everyone else is doing biology). I don’t know if the idea of pseudoreplication would even be brought up in that basic course.

You’d hope that scientists absorb more stats knowledge from others during their training and career, but if someone is surrounded by people who do the same thing, it might just fall low on the list of things that they are motivated to question and verify for themselves.

rvallee

Senior Member (Voting Rights)

yup, my program only requires one very basic stats course (I’m taking more advanced courses because I’m specializing in bioinformatics, everyone else is doing biology). I don’t know if the idea of pseudoreplication would even be brought up in that basic course

You’d hope that scientists absorb more stats knowledge from others during their training and career but if someone is surrounded by people who do the same thing it might just fall low on the list of things that they are motivated to question and verify for themselves

Considering how statistics are central to all medical research, this is really odd. Also it explains a lot. When we look at evidence-based medicine and how they abuse statistics, it might explain everything. They use the tools because they're told to use them, but don't really understand why they should use them.

I guess this is how we end up with an entire discipline, one that aims to influence the lives of billions, standardizing on heavy use of mathematical tools that are meant for quantitative data but get applied to qualitative data, where most of the benefits of those tools don't apply and the results can be wildly distorted.

jnmaciuch

Senior Member (Voting Rights)

Considering how statistics are central to all medical research, this is really odd. Also it explains a lot. When we look at evidence-based medicine and how they abuse statistics, it might explain everything. They use the tools because they're told to use them, but don't really understand why they should use them.

If a project is well funded and comprehensive enough, there will usually be a biostatistician collaborator who does the analysis and (hopefully) knows to account for these things. But often, when you have a smaller investigation like this study, it's just going to be a grad student or postdoc generating the data and learning how to run a few tests with a specific stats program. It would be up to their PI to check their analysis, but if the PI is a biologist who didn't spend much time on statistics, the student will end up with the impression that what they did is good enough. And there are often no requirements for any of the reviewers to have a strong stats background: if it's a small experiment in a mid-tier journal that doesn't involve much sophisticated analysis, the reviewers will probably be chosen based on their familiarity with the biology.

V.R.T.

Senior Member (Voting Rights)

The shenanigans make me less and less confident of the results they’re trying to make people confident of with their shenanigans…

This is really frustrating.

Kitty

Senior Member (Voting Rights)

The incentive structures for sticking to the facts in a measured way seem far less albeit more worthy

I suppose the counter argument is that, if a group really thinks they've got something but still need to pin it down, they might have to produce something shiny every now and again to get the support they need to keep chasing it.

Which is kind of okay, as long as they're bright enough to know when it's time to pull the plug.

NelliePledge

Senior Member (Voting Rights)

I have no idea how difficult or expensive the test is, but boasting of a study being 'a multi-site large-scale investigation' when they only tested 36 pwME in 2 labs seems somewhat overblown.

For this group, I think they usually have low numbers of participants, so it probably seems large to them.

John Mac

Senior Member (Voting Rights)

New research confirms people with ME/CFS have a consistent faulty cellular structure - Griffith News

A faulty ion channel function is a consistent biological feature of Myalgic Encephalomyelitis/Chronic Fatigue Syndrome (ME/CFS), providing long-awaited

chillier

Senior Member (Voting Rights)

- Their cohorts seem well matched, though their ME cohort has significantly lower white cell counts (p = 0.005) and neutrophils (p = 0.01) than the controls. I haven't seen that described in ME before? I think they saw some differences in leukocyte numbers in Beentjes' UK Biobank study, but not with the effect size this paper likely implies.

- they might be comparing cells rather than individuals when testing the ME and control groups making the n appear way higher than it really is. That's not good! (learnt from @forestglip on this thread that's called pseudoreplication, I didn't realise there was a name for that!)

- They show bar plots rather than the individual data points in a strip scatter plot!! I think we need to be able to see the scatter plots with the data points averaged for each individual to really make a judgment of the results.

- they might be comparing cells rather than individuals when testing the ME and control groups making the n appear way higher than it really is. That's not good! (learnt from @forestglip on this thread that's called pseudoreplication, I didn't realise there was a name for that!)

It seems to be the same thing in many papers from this group. Disclaimer that I haven't read all these in detail so I might have missed something, but it looks to me like they all might be using tests which assume independence but are comparing multiple cells per person.

- Low-Dose naltrexone restored TRPM3 ion channel function in natural killer cells from long COVID patients, 2025, Sasso et al.

- Investigation into the restoration of TRPM3 ion channel activity in post-COVID-19 condition: a potential pharmacotherapeutic target, 2024, Sasso et al.

- Novel characterization of endogenous transient receptor potential melastatin 3 ion channels from Gulf War Illness participants, 2024, Marshall-Gradisnik et al.

- Altered TRPM7-Dependent Calcium Influx in Natural Killer Cells of Myalgic Encephalomyelitis/Chronic Fatigue Syndrome Patients, 2023, Preez et al.

- Impaired TRPM3-dependent calcium influx and restoration using Naltrexone in natural killer cells of myalgic encephalomyelitis/chronic fatigue syndrome patients, 2022, Eaton-Fitch et al.

- Transient receptor potential melastatin 3 dysfunction in post COVID-19 condition and myalgic encephalomyelitis/chronic fatigue syndrome patients, 2022, Sasso et al.

- The effect of IL-2 stimulation and treatment of TRPM3 on channel co-localisation with PIP2 and NK cell function in myalgic encephalomyelitis/chronic fatigue syndrome patients, 2021, Eaton-Fitch et al.

- Characterization of IL-2 Stimulation and TRPM7 Pharmacomodulation in NK Cell Cytotoxicity and Channel Co-Localization with PIP2 in Myalgic Encephalomyelitis/Chronic Fatigue Syndrome Patients, 2021, Preez et al.

- Potential Therapeutic Benefit of Low Dose Naltrexone in Myalgic Encephalomyelitis/Chronic Fatigue Syndrome: Role of Transient Receptor Potential Melastatin 3 Ion Channels in Pathophysiology and Treatment, 2021, Cabanas et al.

- Validation of impaired Transient Receptor Potential Melastatin 3 ion channel activity in natural killer cells from Chronic Fatigue Syndrome/ Myalgic Encephalomyelitis patients, 2019, Cabanas et al.

- Naltrexone Restores Impaired Transient Receptor Potential Melastatin 3 Ion Channel Function in Natural Killer Cells From Myalgic Encephalomyelitis/Chronic Fatigue Syndrome Patients, 2019, Cabanas et al.

For example, from the last paper listed, Cabanas 2019, it says they compared cells from ME/CFS and HC with Mann-Whitney. I don't see anywhere that it says they did something like average amplitudes from cells for each person.

outward ionic current amplitudes were significantly decreased after successive PregS stimulations in NK cells from ME/CFS patients in comparison to HC (Figures 2E–G)

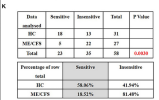

And while I'm not sure if the raw data is available to confirm that the Mann-Whitney p-value is based on cells, not people, it's at least possible to check with the Fisher's exact test results:

Eight ME/CFS patients and 8 age- and sex-matched healthy controls (HC) were recruited

In contrast, ionic currents evoked by both successive applications of PregS were mostly resistant to ononetin in isolated NK cells from ME/CFS patients (Figures 3E,F,H,J) in comparison with HC (Figures 3K,L) (p = 0.0030 and p = 0.0035).

I confirmed that p = 0.003 when running Fisher's exact test on the numbers in the table. So it's based on 58 observations, even though there are only 16 participants.
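As a rough illustration of why the unit of analysis matters here, below is a small sketch of a two-sided Fisher's exact test written with the standard library. The two tables are hypothetical numbers I made up to have similar proportions at two different sample sizes: they are not the counts from the paper's table, and the person-level table assumes each participant is classified once, which is my assumption and not something the paper did.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2 = a + b, c + d
    col1 = a + c
    total = row1 + row2

    def weight(x):
        # Number of ways to get x in the top-left cell with all margins fixed.
        return comb(row1, x) * comb(row2, col1 - x)

    lo = max(0, col1 - row2)
    hi = min(row1, col1)
    observed = weight(a)
    # Sum every table that is no more likely than the observed one.
    # Weights are exact integers, so the comparison has no rounding issues.
    extreme = sum(weight(x) for x in range(lo, hi + 1) if weight(x) <= observed)
    return extreme / comb(total, col1)


if __name__ == "__main__":
    # Hypothetical counts only: 58 cells treated as independent observations.
    p_cells = fisher_exact_two_sided(22, 7, 10, 19)
    # Hypothetical counts only: 16 participants, each classified once.
    p_people = fisher_exact_two_sided(6, 2, 3, 5)
    print(f"cells as units (n=58):  p = {p_cells:.4f}")
    print(f"people as units (n=16): p = {p_people:.4f}")
```

With similar proportions in both made-up tables, the cell-level version comes out well below 0.05 and the person-level version does not. That doesn't show the paper's finding would vanish with a per-person analysis, only that counting cells as independent observations can make the p-value much smaller than the number of participants supports.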