SMILE trial data to be released

- Thread starter JohnTheJack


JohnTheJack

Senior Member (Voting Rights)

@JohnTheJack: Do you know if the school attendance data provided is that which allows calculation of the trial's prespecified primary outcome (ie: that from school records, rather than just the participant's self-report)?

I was expecting there to be two sets of school attendance data, but can only see one.

Thanks for all your work on this.

I don't. I think others are better placed than I am to comment on the data.

You're welcome.

If there is a lot of missing data how robust can any conclusions be?

I suspect data protection rules may have stymied procuring official attendance records. When it comes to children, data sharing rules can be extremely strict in education.

The study may have straddled the period in which recent data protection legislation was being implemented?

rvallee

Senior Member (Voting Rights)

If there is a lot of missing data how robust can any conclusions be?

Ah, see, it's missing the secret unlocking code found in Tarot cards.

Thinking out loud, but it seems that relying on a copyrighted self-help "treatment" makes it all the more difficult to get hold of the training material, since it could be actionable if it was published without permission. I don't think I've seen anything beyond some participants' descriptions. It's impossible to judge the value of a treatment without revealing any part of it. They can't keep it secret while promoting it as valid science.

Beyond the data, this is a self-help "treatment" created by a quack who claims he has healing hands and has people jumping on mats and doing affirmation rituals. Run in a trial, funded by famous universities. Published in a world-class medical journal. People jumping on mats and yelling at their symptoms.

It just feels like there are too many layers of insane to work out here and I don't really understand why this obvious pseudoscience could worm its way into actual medical research without objections raised at every damn step in the process.

Wonko

Senior Member (Voting Rights)

Have you heard of money?

Or one hand washing the other?

There's a network of these 'people', all supporting each other, and universities these days, run by businessmen.

They used to run 'carnivals', now they run universities, and fill them with whoever makes money, in the name of 'science'.

If there is a lot of missing data how robust can any conclusions be?

I suspect data protection rules may have stymied procuring official attendance records. When it comes to children, data sharing rules can be extremely strict in education.

The study may have straddled the period in which recent data protection legislation was being implemented?

They used some imputation techniques to add in missing data but I think you need additional variables to be able to do this (things like age, sex, .... ) as you build a model of the relationships between the variables and use those to fill in missing values.

In their paper they did look at the sensitivity to using these techniques, and I think if they quote intention-to-treat figures they use imputation (but I'm not sure). I suspect someone like @Lucibee or @Sid could say more.

Esther12

Senior Member (Voting Rights)

I don't. I think others are better placed than I am to comment on the data.

From what I remember of the ruling, Bristol was making arguments about reidentification via school records, so at that point they seemed to realise that you were requesting the data for the school attendance records. Might they have tried to omit that from the release? Could you just check with them (or would that risk being treated as an entirely new FOI?)

edit: Actually, has anyone compared the school attendance results to those from the published paper? Maybe these are the different figures? Sorry - I should have tried to do that before asking. I've forgotten most of the details of SMILE tbh.

Lucibee

Senior Member (Voting Rights)

They used some imputation techniques to add in missing data but I think you need additional variables to be able to do this (things like age, sex, .... ) as you build a model of the relationships between the variables and use those to fill in missing values.

In their paper they did look at the sensitivity to using these techniques, and I think if they quote intention-to-treat figures they use imputation (but I'm not sure). I suspect someone like @Lucibee or @Sid could say more.

They do say that they tested the sensitivity with and without the imputed data, but they don't say what the models/parameters for those imputed data were. That's important. If the imputed data are based on the data provided (ie, assuming data are missing at random), then of course they won't change the results if they include them. However, if the data were not missing at random (which is likely to be the case - missing data are highly likely to indicate a worse outcome), you still have to make assumptions about how those missing data might be presenting differently. But the paper doesn't tell us what those assumptions were. And they haven't included any imputed (outcome) data in the dataset they have provided.
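
To make the missing-at-random point concrete, here is a minimal Python sketch. It is not the SMILE analysis: the attendance values are made up, `impute_observed_mean` and `impute_shifted` are hypothetical helper names, and simple mean imputation stands in for whatever model the authors actually used (the paper doesn't say). It only illustrates that filling gaps from the observed data leaves the group means where they were, while assuming the missing children did worse moves them.

```python
from statistics import mean

MAX_DAYS = 5.0  # attendance assumed to be days per week, 0 to 5


def impute_observed_mean(values):
    """Fill missing values (None) with the mean of the observed ones.

    This mimics a missing-at-random style assumption: missing children
    are assumed to look like the children we did observe.
    """
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def impute_shifted(values, delta):
    """Fill missing values with the observed mean shifted by delta.

    A negative delta encodes the assumption that missing children
    did worse than observed ones (not missing at random).
    """
    observed = [v for v in values if v is not None]
    fill = min(MAX_DAYS, max(0.0, mean(observed) + delta))
    return [fill if v is None else v for v in values]


# Hypothetical 12-month attendance (days per week); None = missing.
smc = [2.0, 3.0, 4.0, None, 3.0]
lp = [3.0, 4.0, 5.0, None, None, 4.0]

# Gap between arms when missing values are filled from observed data.
mar_gap = mean(impute_observed_mean(lp)) - mean(impute_observed_mean(smc))

# Gap when missing children are assumed to attend one day less.
mnar_gap = mean(impute_shifted(lp, -1.0)) - mean(impute_shifted(smc, -1.0))

print(f"gap, filled from observed data: {mar_gap:.2f}")
print(f"gap, missing assumed 1 day worse: {mnar_gap:.2f}")
```

In this toy example the first gap is identical to the complete-case gap, which is why a sensitivity check built only on the observed data can't tell you much. The second gap depends entirely on the assumed shift, so that assumption needs to be stated.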

Lucibee

Senior Member (Voting Rights)

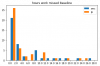

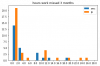

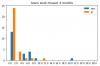

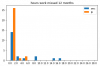

Like @Adrian I've had a look at the attendance data.

Before I saw the data (back in 2017), I thought that they should have looked at mean difference and not difference in means (same applies to PACE data imo). That bears out, especially as the data are so skewed. Individual diffs show a much more normal distribution, but would still benefit from baseline adjustment (I don't have the facilities to do this at the moment, nor to test stat sig).

I've shown graphs for the differences between baseline and 6 months and 12 months in SR school attendance, amalgamated to whole days (plus/minus 0.5-1, 1.5-2, 2.5-3 etc).

6 months: Mean diff for SMC is 0.28 days. Mean diff for LP+SMC is 0.81 days. Diff of diffs = 0.54 days

12 months: Mean diff for SMC is 0.79 days. Mean diff for LP+SMC is 1.57 days. Diff of diffs = 0.78 days

School attendance at 6 months was supposed to be a primary outcome measure. The SMILE trial paper only reports attendance at 12 months as a secondary endpoint. Make of that what you will.

Also note that 40% [correction, 25-30%] of the data are missing (in both groups) by the time we get to 12 months.
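
For anyone wanting to redo this, a minimal sketch of the calculation described above: take each child's change from baseline, average within each arm, then subtract. The lists are invented numbers, `mean_change` is a hypothetical helper, and children missing either measurement are simply dropped (an assumption, and there is no baseline adjustment here). The last lines redo the subtraction from the rounded figures quoted above; at 6 months that gives 0.53 rather than 0.54, presumably because the quoted figure came from unrounded means.

```python
from statistics import mean


def mean_change(baseline, followup):
    """Mean of per-child changes (followup minus baseline).

    Only children with both values present are used; None marks
    a missing measurement.
    """
    changes = [
        f - b
        for b, f in zip(baseline, followup)
        if b is not None and f is not None
    ]
    return mean(changes)


# Hypothetical self-reported attendance, days per week.
smc_base = [2.0, 3.0, 1.0, 4.0]
smc_6m = [2.5, 3.0, None, 4.5]
lp_base = [2.0, 3.0, 1.0, 4.0]
lp_6m = [3.0, 4.0, 2.0, None]

smc_change = mean_change(smc_base, smc_6m)
lp_change = mean_change(lp_base, lp_6m)
diff_of_diffs = lp_change - smc_change

print(f"SMC mean change: {smc_change:.2f}")
print(f"LP+SMC mean change: {lp_change:.2f}")
print(f"difference of mean changes: {diff_of_diffs:.2f}")

# Same subtraction using the rounded figures quoted in the post.
quoted_6m = round(0.81 - 0.28, 2)
quoted_12m = round(1.57 - 0.79, 2)
print(quoted_6m, quoted_12m)
```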

JohnTheJack

Senior Member (Voting Rights)

If there is a lot of missing data how robust can any conclusions be?

I suspect data protection rules may have stymied procuring official attendance records. When it comes to children, data sharing rules can be extremely strict in education.

The study may have straddled the period in which recent data protection legislation was being implemented?

No, it was decided under the old law, and the argument re children was dismissed.

arewenearlythereyet

Senior Member (Voting Rights)

So attendance difference implies that this is compared to a base figure? Do we have these numbers? Surely if the base attendance was 50% (say 95 days out of a potential 190 in a year) then a one-day difference wouldn't be significant... Sorry, I may be being a bit simplistic, but shouldn't they be looking at the context of the difference? I'm just wondering what the basis of that calculation is. I can't yet look at the data since my iPad doesn't unzip files... need to crank open a laptop.

I think the 'days attendance' is days per week, so the possible range in the attendance data is 0-5. The difference that Lucibee has graphed is then the difference for each child between their self-reported weekly attendance at baseline and at 6 months, which has a range from -5 to +5.

And I realise I should have left that for Lucibee to answer. Sorry, fools rush in and all that.

Unable

Senior Member (Voting Rights)

So -5 and +5 are only possible where initial attendance is either full, or zero respectively!

Thus seeing only the change is weird because there are variable floor and ceiling effects for each individual!

In reality this forces most of the outcomes to the centre of the range.
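
A quick sketch of that constraint in Python, assuming attendance is recorded as days per week between 0 and 5 (`possible_change_range` is a hypothetical helper, not something from the trial): for a given baseline, the change can only fall between minus the baseline and five minus the baseline.

```python
def possible_change_range(baseline_days, max_days=5):
    """Return the (lowest, highest) change a child can show.

    A child cannot drop below 0 days or rise above max_days, so the
    attainable change depends on where they started.
    """
    if not 0 <= baseline_days <= max_days:
        raise ValueError("baseline must be between 0 and max_days")
    return (-baseline_days, max_days - baseline_days)


# Each baseline has its own floor and ceiling on the change score.
for baseline in range(6):
    low, high = possible_change_range(baseline)
    print(f"baseline {baseline} days: change between {low:+d} and {high:+d}")
```

Only a child starting at 0 can show +5 and only a child starting at 5 can show -5, which is the point made above about each individual having a different floor and ceiling.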

I think the 'days attendance' is days per week, so the possible range in the attendance data is 0-5. The difference that Lucibee has graphed is then the difference for each child between their self-reported weekly attendance at baseline and at 6 months, which has a range from -5 to +5.

And I realise I should have left that for Lucibee to answer. Sorry, fools rush in and all that.

I think that is the case. There is also an issue that this is school attendance for a week in the reporting period, which is flexible, and I think that can add additional biases in terms of which week someone reports. I'm less likely to do stuff, and certainly to fill out forms, when my daughter is less well.

arewenearlythereyet

Senior Member (Voting Rights)

I think the 'days attendance' is days per week, so the possible range in the attendance data is 0-5. The difference that Lucibee has graphed is then the difference for each child between their self-reported weekly attendance at baseline and at 6 months, which has a range from -5 to +5.

And I realise I should have left that for Lucibee to answer. Sorry, fools rush in and all that.

No worries and thanks... I'm sure she won't mind. I'm still struggling to make head nor tail of what it says. I guess that laptop needs to come out and I'll need to refresh my memory on her 'methodology' before trying to understand it?

Lucibee

Senior Member (Voting Rights)

The reason I looked at mean difference is because there are problems with the measures they chose to look at.

One of their original primary outcome measures was mean school attendance at 6 months. This was subsequently changed to a secondary outcome measure (that's another issue), and was reported in Table 3 as being 2.6 days vs 3.2 days (SMC vs SMC+LP) at 6 months and 3.1 vs 4.1 at 12 months. However, this is not a good way to describe the data, which is very skewed, particularly at 12 months. [eta: It also tells you nothing about actual improvement, which is surely the most important thing.] Although the differences between the means are similar to what you would obtain by looking at mean differences, the implications are different, because you are *not* looking at improvements, which are small (less than a day for each group).

Here are the graphs to show how skewed the data are at 6 and 12 months:

I hope you can see why use of means is inappropriate to summarise (and compare) these data, whereas for mean *difference* it is more appropriate.

Also, if you look closely at the data, particularly for those in the 5-day group at 12 months, most of those individuals had only increased their attendance by a day or so.

Lucibee

Senior Member (Voting Rights)

Actually, has anyone compared the school attendance results to those from the published paper?

Baseline data certainly match (from Table 1).

Just checked means from Table 3 - all present and correct.

So attendance difference implies that this is compared to a base figure? Do we have these numbers? Surely if the base attendance was 50% (say 95 days out of a potential 190 in a year) then a one-day difference wouldn't be significant... Sorry, I may be being a bit simplistic, but shouldn't they be looking at the context of the difference? I'm just wondering what the basis of that calculation is. I can't yet look at the data since my iPad doesn't unzip files... need to crank open a laptop.

I think this is a very valid point: what is the attendance datum (say for the previous 3-6 months) from which you are measuring? The interventions have to be set in the context of what is "normal" for these individuals.

This age group is also the one with the best prognosis for natural recovery (and if they are attending school on a semi-regular basis then they are mildly affected), so is there a method for adjusting for this too?

Esther12

Senior Member (Voting Rights)

Baseline data certainly match (from Table 1).

Just checked means from Table 3 - all present and correct.

Thanks Lucibee - so that confirms that this is not the unpublished data from school records.

@JohnTheJack - it could be worth checking if this data was omitted by Bristol in error?

JohnTheJack

Senior Member (Voting Rights)

Thanks Lucibee - so that confirms that this is not the unpublished data from school records.

@JohnTheJack - it could be worth checking if this data was omitted by Bristol in error?

I'm still not clear that there is anything missing. I understood @Lucibee to be saying that all the data are there.

Perhaps you could say exactly what in terms of my request you think is missing.

I asked for:

Please provide the following patient-level data at baseline, 3 months, 6 months and 1 year assessments, where available.

1. SF-36 physical functioning scores.

2. School attendance in the previous week, collected as a percentage (10, 20, 40, 60, 80 and 100 %).

3. Chalder Fatigue Scale scores.

4. Pain visual analogue scale scores.

5. HADS scores.

6. SCAS scores.

7. Work Productivity and Activity Impairment Questionnaire: General Health.

8. Health Resource Use Questionnaire.