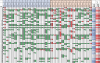

OK, I'm trying to go through and look at whether, e.g., two non-completions put participants off picking hard (or something similar). I'm not doing anything high-brow: just filters and hiding columns initially, and then simple conditional formatting.

The first thing that struck me when I filtered by completion and hard was how few HVs had failed to complete hard tasks. Or to be more precise, how few hard tasks HVs had failed to complete.

Of the hard tests chosen, one HV failed to complete 6 'live' and 2 further 'prep' tests (prep being the negative trial numbers on the Excel sheet), and all the rest of the HVs between them only failed to complete 5 'live' and 3 'prep' ones. Compare that to over 100 non-completions from ME-CFS. It's pretty striking actually when you just chuck those filters on.
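For anyone who would rather script that filter than click through it, here is a minimal Python sketch of the same count. The column names (`group`, `participant`, `trial`, `hard`, `completed`) and the rows are made-up placeholders, not the real spreadsheet, and it assumes prep trials are the ones with negative trial numbers.

```python
from collections import Counter

# Toy rows only: invented for illustration, NOT the study data.
# Assumed columns: group, participant, trial number, hard chosen?, completed?
rows = [
    {"group": "HV", "participant": "A", "trial": -2, "hard": True, "completed": False},
    {"group": "HV", "participant": "A", "trial": 3, "hard": True, "completed": False},
    {"group": "HV", "participant": "A", "trial": 4, "hard": True, "completed": True},
    {"group": "HV", "participant": "B", "trial": 5, "hard": False, "completed": True},
    {"group": "ME-CFS", "participant": "A", "trial": 1, "hard": True, "completed": False},
    {"group": "ME-CFS", "participant": "A", "trial": 2, "hard": True, "completed": False},
    {"group": "ME-CFS", "participant": "D", "trial": 7, "hard": True, "completed": False},
]


def count_hard_failures(rows):
    """Count failed hard trials per (group, participant, phase)."""
    counts = Counter()
    for r in rows:
        if r["hard"] and not r["completed"]:
            # Assumption: negative trial numbers mark prep/warm-up trials.
            phase = "prep" if r["trial"] < 0 else "live"
            counts[(r["group"], r["participant"], phase)] += 1
    return counts


failures = count_hard_failures(rows)
for key in sorted(failures):
    print(key, failures[key])
```

Summing the counts per group gives the headline HV vs ME-CFS comparison, while the per-participant keys show who the failures are actually coming from.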

I then tried highlighting the last ones each participant failed to complete and then removing that filter to just have 'hard selected', to see if there was any pattern where any participants obviously stopped choosing hard after that. So far that doesn't look like the case. In fact, if you use conditional formatting on the two columns 'complete task yes or no' and 'reward yes or no', the difference between participants becomes quite striking. (I put the reward one on because, you know, it is testing motivation, so 'look what you could've won' vs 'was a no-win one anyway' was worth keeping an eye on.)
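The 'did they stop choosing hard after their last fail' check could be roughed out like this. It is only a sketch: the function name, the `hard`/`completed` keys and the example trials are hypothetical, and comparing the hard-choice rate before and after the last failed hard trial is just one crude way of putting a number on what the highlighting shows.

```python
def hard_rate(trials):
    """Share of trials where hard was chosen, or None for an empty list."""
    if not trials:
        return None
    return sum(1 for t in trials if t["hard"]) / len(trials)


def hard_rate_around_last_failure(trials):
    """Compare hard-choice rate up to and after the last failed hard trial.

    `trials` is one participant's trials in order, each a dict with
    boolean 'hard' and 'completed' keys (assumed names).
    Returns (rate_up_to_last_fail, rate_after), or None if they never
    failed a hard trial.
    """
    last_fail = None
    for i, t in enumerate(trials):
        if t["hard"] and not t["completed"]:
            last_fail = i
    if last_fail is None:
        return None
    return hard_rate(trials[: last_fail + 1]), hard_rate(trials[last_fail + 1:])


# Invented example participant (NOT real data): (hard chosen?, completed?)
example = [
    {"hard": h, "completed": c}
    for h, c in [
        (True, True), (True, False), (False, True), (True, False),
        (False, True), (False, True), (True, True), (False, True),
    ]
]

before_rate, after_rate = hard_rate_around_last_failure(example)
print(before_rate, after_rate)
```

A big drop from the first number to the second would be the 'put off' pattern; similar numbers would match what the spreadsheet seems to show so far.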

HVA failed to complete 6 rounds early on (completed one, failed one, completed one, failed 5 hard ones, then completed the rest of the hard ones they selected) and then got it together, and it's pretty much 'all green' for them after that (I conditionally formatted completed tasks green and failed ones red). They did 12 more hard ones after they got it together and seemed to be basing the choice on reward magnitude: they selected enough low-probability trials that probability didn't seem to be a discriminator for them.

Across the rest of the HVs you've then just got HVB failing 2 non-prep hard ones and E, N, O each failing to complete just one out of all the hard tasks they selected.

Then the variation within the ME-CFS group falls into pretty stark 'groupings'. It's not what I expected though, which was people just giving up when they fail x amount of hard in a row.

The following 6 ME-CFS don't seem to have a 'completion issue' and are relatively consistent with what you might see in some of the HVs: ME-CFS C, E, F (who seems to be sensibly picking high probability or 50:50 and high value for hard), J (the same but even more so: only high probability and the odd 50:50), K (same: high probability and higher-value 50:50), M (same: high probability and high-value 50:50).

N failed twice (having failed to complete once in the warm-up too), not in a row (there was a completion of one hard in between), but they then seemed to only pick high probability trials as 'hard' and completed them, choosing 15 hard in total - by comparison HV N only chose 13 hard but M 20 hard.

There is a group of 4 ME-CFS with 'a lot of red' from non-completion who nevertheless clearly continue to choose hard after that. Excluding the 'prep' trials: ME-CFS A failed to complete all but 2 of the 15 they chose hard for (similar strategic choices, just a few clicks short in those); B only chose 9 hard and only completed 3 of those, falling short by just one on a few, so clearly a capability issue; D chooses hard loads despite failing nearly every time by significant amounts (clicks in the 70s and 80s) and is clearly determined to 'get there', finally managing two completions and two near-misses of one click right at the end of their hard ones; H selects hard loads of times based on probability and value but fails to complete, with clicks in the 80s.

Then there are the 'in-betweeners' who to my eye are clearly being affected by capability issues in some way in the task just not to the extent of the group above.

L was 'OK' and selected hard a good bit early on, but failed 3 in the middle, 2 by quite a way (83, 85 clicks) by trial 27 and then only picked hard 3 more times (which they completed and were high probability, high value).

O looks like 'fatigue/fatiguability' too: after warm-up fails they do 5 successful hard completions early on, one fail (96), two completions, one fail (97), one completion, three fails (96, 96, 97), and then only select hard 3 more times. It's not quite obvious/direct enough whether the ones failed were 'sequential', but their first fail came when doing hard for trial 9 (successful) and then 10 (just missed). Then at 13 and 15 they selected hard and completed, at 17 they selected hard again and failed (97 clicks tho), 19 they completed, and then 20, another hard straight after, they failed (97). At 23 and 24 hard was selected and both failed (97, 96); their next hard was trial 29 and they completed, failed 32 (97) and then completed 37.

So it's easy for me to look at this, relate, and think the person was 'borderline', i.e. their clicks when they missed were just a few off, vs the group above who were often 10-20+ clicks away. Given that their misses were so close, my gut is that fatiguability is playing a part, but provability-wise the stats wouldn't be there etc.
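One way to make 'borderline' vs 'a long way off' a bit less eyeball-y is to turn each failed hard trial into a miss margin. This is a rough sketch: the 98-click target and the 3-click 'near' cutoff are assumptions to be swapped for the real task requirement and whatever cutoff seems defensible, and the function names are made up. The click counts are the ones quoted above for O and L.

```python
def miss_margins(failed_clicks, required_clicks=98):
    """How many clicks short each failed hard trial was.

    required_clicks=98 is an ASSUMED target, not confirmed here.
    """
    return [required_clicks - c for c in failed_clicks if c < required_clicks]


def classify_misses(failed_clicks, required_clicks=98, near_cutoff=3):
    """Split misses into 'near' (within near_cutoff clicks) and 'far'.

    near_cutoff=3 is an arbitrary illustrative threshold.
    """
    margins = miss_margins(failed_clicks, required_clicks)
    near = sum(1 for m in margins if m <= near_cutoff)
    return {"near": near, "far": len(margins) - near}


# Click counts on failed hard trials as quoted in the post.
o_fails = [96, 97, 96, 96, 97]
l_fails = [83, 85]

print("O:", miss_margins(o_fails), classify_misses(o_fails))
print("L:", miss_margins(l_fails), classify_misses(l_fails))
```

It doesn't prove fatiguability, but it does let you describe each participant's fails by how close they were rather than just counting red cells.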

G fails to complete hard ones 6 times in a row early (by just a few clicks) then selects hard 7 more times a bit more spaced out and completes 5/7 times.

I shows a similar pattern, failing 4 hard early on (90-95), completing one, failing one (97), completing 2, then failing 1 (96) and then completing 4 more hards. Interesting to note here for 'I' that those two latter fails were when they had selected 2 trials as 'hard' in a row (26 they complete, 27 they fail; 36 they complete, 37 they fail).
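That 'second hard in a row' observation is easy to check for every participant. A minimal sketch, assuming you can pull out each person's hard-selected trials as (trial number, completed) pairs; the function name and output layout are hypothetical, and the example uses only the four trials for 'I' mentioned above, so on its own it shows the mechanics rather than any result.

```python
def back_to_back_failure_split(hard_trials):
    """Tally fails for hard trials that directly follow another hard trial
    versus hard trials that don't.

    `hard_trials` is a list of (trial_number, completed) pairs covering only
    the trials where hard was selected, in trial order.
    """
    tally = {
        "back_to_back": {"fails": 0, "total": 0},
        "spaced": {"fails": 0, "total": 0},
    }
    prev_number = None
    for number, completed in hard_trials:
        # "Back to back" = the previous hard trial was the trial just before.
        follows_hard = prev_number is not None and number == prev_number + 1
        key = "back_to_back" if follows_hard else "spaced"
        tally[key]["total"] += 1
        if not completed:
            tally[key]["fails"] += 1
        prev_number = number
    return tally


# The four trials for participant 'I' quoted in the post.
i_trials = [(26, True), (27, False), (36, True), (37, False)]
result = back_to_back_failure_split(i_trials)
print(result)
```

Run across all of a participant's hard trials, a much higher fail share in `back_to_back` than in `spaced` would fit the fatiguability reading, though with this few trials per person it stays descriptive.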

It makes it quite hard to come up with an analytical strategy that would be a neat 'calculation', unless you can think of some genius one? But I think it is worth analysing at the descriptive level and noting that the 'within group' variation is significant. Some of the inferences might then not hold either, because 'fails to complete' could be coming from one sub-group (who seemed perhaps to be desperate to give it a go and eventually get the odd win, 'I will manage 98', which sounds like me on some days in the past with my illness), whereas 'picking less hard' or other things could be coming from another sub-group, I suspect for the purposes of trying to get the best out of the body they are working with (which also sounds like me on better days, where I have had a little more in the capability-tank than blind effort, so had to use it wisely).