Vagus nerve-mediated neuroimmune modulation for rheumatoid arthritis: a pivotal randomized controlled trial 2025 Tesser et al

- Thread starter Jaybee00

Jonathan Edwards

Senior Member (Voting Rights)

A 10% increment in ACR20 is pretty feeble. The real question is whether the blinding was in fact adequate (the unblinded phase gave higher rates of ACR20) or whether there is a modest biological effect from vagal stimulation. I am not going to hold my breath.

Utsikt

Senior Member (Voting Rights)

Fig. 2: Integrated neuromodulation system.

It was not much stimulation, only 1 minute daily:

The integrated neuromodulation system consists of an implant and pod. The implant is placed in the pod to position and hold it in place on the left cervical vagus nerve to ensure direct contact for precise stimulation. The implant is approximately 2.5 cm in length and weighs 2.6 g. To charge the implant, patients wear a wireless device (charger) around the neck for a few minutes, once a week. The implant is programmed by healthcare providers (HCPs) using a proprietary application (programmer).

It was not much stimulation, only 1 minute daily:

They were allowed to use other treatments after the 3 month blind period:The active stimulation intensity was set to an upper comfort level (maximum = 2.5 mA) and delivered a 1-min train of pulses to the vagus nerve once daily at 10 Hz16 (arm 1 = 1.8 mA average; arm 2 = 0 mA).

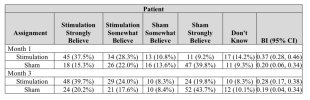

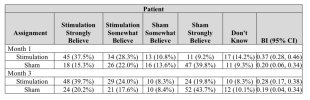

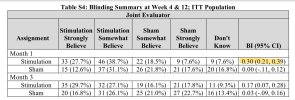

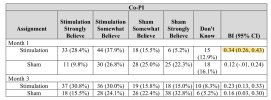

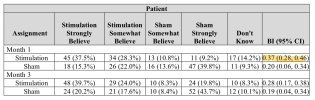

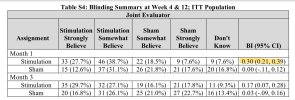

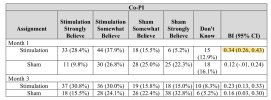

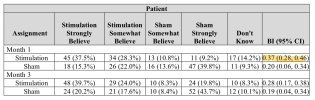

They used Bang’s blinding index to assess blinding. I’m not very familiar with the index, but a visual inspection of the data shows what I believe is a clear skew towards guessing correctly in both groups:Following the primary end point assessment at 3 months, all patients were eligible to continue in the study for open-label active stimulation treatment. Adjunctive pharmacological treatments (‘augmented therapy’) were permitted throughout the open-label stimulation period at the discretion of the rheumatologist in consultation with the patient, with 17.8%, 24.8% and 32.2% of patients receiving protocol-defined augmented therapy at 6, 9 and 12 months, respectively. At these timepoints, 88.0%, 80.6% and 75.2% of patients remained free from adjunctive b/tsDMARD therapy.

Yann04

Senior Member (Voting Rights)

> They used Bang’s blinding index to assess blinding. I’m not very familiar with the index, but a visual inspection of the data shows what I believe is a clear skew towards guessing correctly in both groups:

This is good to see. I’m surprised the authors wrote “pivotal” in their title when this data plus the open-label data seem to clearly indicate that this trial is not enough to establish whether there is an effect or not.

Jonathan Edwards

Senior Member (Voting Rights)

Utsikt

Senior Member (Voting Rights)

> This is good to see. I’m surprised the authors wrote “pivotal” in their title when this data plus the open-label data seem to clearly indicate that this trial is not enough to establish whether there is an effect or not.

At this point I’ve stopped being surprised at the dishonesty in science. Everything is propaganda unless proven otherwise.

Yann04

Senior Member (Voting Rights)

> At this point I’ve stopped being surprised at the dishonesty in science. Everything is propaganda unless proven otherwise.

Yes my “surprise” was rhetorical. A hedged and euphemised way to say that the authors seem to come to a conclusion explicitly not supported by their evidence.

Unfortunately outside this community I have to find ways to hedge and soften down my critiques because everyone seems to take being published in a paper as gospel. (I imagine if I had the energy of a healthy person I could be more outspoken but I never really have the energy to debate so I try not to provoke them ahah).

Utsikt

Senior Member (Voting Rights)

> Yes my “surprise” was rhetorical. A hedged and euphemised way to say that the authors seem to come to a conclusion explicitly not supported by their evidence.
>
> Unfortunately outside this community I have to find ways to hedge and soften down my critiques because everyone seems to take being published in a paper as gospel. (I imagine if I had the energy of a healthy person I could be more outspoken but I never really have the energy to debate so I try not to provoke them ahah).

Sounds reasonable. I have the same experience! It’s incredibly frustrating.

> They used Bang’s blinding index to assess blinding. I’m not very familiar with the index

Assessment of blinding in clinical trials (2004)

Success of blinding is a fundamental issue in many clinical trials. The validity of a trial may be questioned if this important assumption is violated. Although thousands of ostensibly double-blind trials are conducted annually and investigators acknowledge the importance of blinding, attempts to measure the effectiveness of blinding are rarely discussed. Several published papers proposed ways to evaluate the success of blinding, but none of the methods are commonly used or regarded as standard.

This paper investigates a new approach to assess the success of blinding in clinical trials. The blinding index proposed is scaled to an interval of −1 to 1, 1 being complete lack of blinding, 0 being consistent with perfect blinding and −1 indicating opposite guessing which may be related to unblinding. It has the ability to detect a relatively low degree of blinding, response bias and different behaviors in two arms.

The proposed method is applied to a clinical trial of cholesterol-lowering medication in a group of elderly people.

Web | Controlled Clinical Trials | Paywall

A simple blinding index for randomized controlled trials (2024)

Blinding is an essential part of many randomized controlled trials. However, its quality is usually not checked, and when it is, common measures are the James index and/or the Bang index. In the present paper we discuss these two indices, providing examples demonstrating their considerable weaknesses and limitations, and propose an alternative method for measuring blinding. We argue that this new approach has a number of advantages. We also provide an R-package for computing our blinding index.

Web | Contemporary Clinical Trials Communications | Open Access
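For anyone else unfamiliar with the index, here is a minimal sketch of how Bang's BI is usually computed per arm when patients answer in three categories (guess active, guess sham, don't know). In that format it reduces to (correct guesses minus incorrect guesses) divided by all responses. The class and function names are made up for illustration, and the counts are hypothetical, not the trial's data. As I understand it, the 2004 paper also describes a weighted version for five-point certainty scales, which is not shown here and may be what this trial used.

```python
from dataclasses import dataclass


@dataclass
class ArmGuesses:
    """Treatment guesses within one trial arm (3-category format)."""
    correct: int     # guessed the arm they were actually in
    incorrect: int   # guessed the other arm
    dont_know: int   # declined to guess


def bang_bi(arm: ArmGuesses) -> float:
    """Bang's blinding index for one arm: (correct - incorrect) / total.

    Ranges from -1 (everyone guesses the opposite arm) through 0
    (consistent with random guessing) to 1 (everyone guesses correctly).
    """
    total = arm.correct + arm.incorrect + arm.dont_know
    if total == 0:
        raise ValueError("arm has no responses")
    return (arm.correct - arm.incorrect) / total


# Hypothetical counts for illustration only; NOT data from the trial.
active = ArmGuesses(correct=50, incorrect=30, dont_know=20)
sham = ArmGuesses(correct=55, incorrect=25, dont_know=20)

print(f"active arm BI = {bang_bi(active):.2f}")  # 0.20
print(f"sham arm BI   = {bang_bi(sham):.2f}")    # 0.30
```

The index is calculated separately for each arm, which is why a skew towards correct guesses shows up as a positive value in both arms rather than cancelling out.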

rvallee

Senior Member (Voting Rights)

> At this point I’ve stopped being surprised at the dishonesty in science. Everything is propaganda unless proven otherwise.

Oh, I don't know, I don't think fraud should be categorized as propaganda. There's lots of fraud, too. So much fraud.

Obviously completely unrelated to widespread loss of trust in experts. No, see, it's the TikToks, has to be! It's not as if blending pseudoscience and science could actually backfire, it's all well-meaning and so on and so forth.

Merged post

substack.com

Might be of interest to @Jonathan Edwards

Eric Topol (@erictopol)

1 minute of vagus nerve stimulation per day was recently FDA approved as a treatment for rheumatoid arthritis. A new Ground Truths podcast with Prof Kevin Tracey who pioneered this field https://erictopol.substack.com/p/vagus-nerve-stimulation-and-the-immune

Last edited by a moderator:

ME/CFS Science Blog

Senior Member (Voting Rights)

> a visual inspection of the data shows what I believe is a clear skew towards guessing correctly in both groups:

Was thinking that if the treatment really worked it would probably also skew the results in the intervention group, with more people correctly identifying they were getting the treatment and not the inactive control.

This wouldn't explain why 40% in the sham group strongly believed they were in the sham group. But perhaps if the intervention didn't work at all, the proportion believing they were getting a sham in the intervention group would also be quite high and closer to 40%. In other words, I'm not sure if we should expect a random or equal distribution of guesses in the sham group.

Jonathan Edwards

Senior Member (Voting Rights)

Merged post

Eric Topol seems to be a science magpie. I think we looked at the studies on this and were underwhelmed.

Last edited by a moderator:

> Was thinking that if the treatment really worked it would probably also skew the results in the intervention group, with more people correctly identifying they were getting the treatment and not the inactive control.

Yes, it seems to me that people should have been asked what treatment they were on just a couple of days into the treatment. That would be more likely to pick out a problem in blinding. Assessing which treatment people believe they are getting even at one month would surely be confounded by an effective treatment, especially if word got around that some people were improving on the trial.

I think it should have been possible for there to be effective blinding, what with the implanting of the stimulator.

My set point on vagus stimulation is that it is probably ineffective (anything that clips on an ear is almost certainly ineffective). But, I don't think those 1 month results necessarily show that blinding was inadequate.

(sorry, edited/added more)

Last edited:

Utsikt

Senior Member (Voting Rights)

> Was thinking that if the treatment really worked it would probably also skew the results in the intervention group, with more people correctly identifying they were getting the treatment and not the inactive control.

If we go by JE’s assessment above, it looks like it didn’t really work, which strengthens the argument that the intervention effectively broke the blinding in the intervention group.

> But perhaps if the intervention didn't work at all, the proportion believing they were getting a sham in the intervention group would also be quite high and closer to 40%.

Exactly. And it doesn’t seem like it worked in any particularly meaningful way, which would further strengthen the hypothesis that the blinding was broken by the intervention and not the effect of the intervention.

> In other words, I'm not sure if we should expect a random or equal distribution of guesses in the sham group.

If you expect the intervention to work and take the lack of an effect to mean that you’re in the sham group, sure.

But you could also say that the lack of an effect might be because the intervention didn’t work, and your guess would be 50/50 between sham and intervention in the sham group.

Alternatively, you could reason that the intervention group is less likely to not experience an effect (because sometimes the intervention actually works), so if your aim is to guess correctly the most times and you don’t experience an effect, you should guess that you’re in the sham group.

I agree with Hutan that an early assessment would have been good, ideally combined with a late assessment as well.
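The guessing strategies discussed above can be made concrete with a toy calculation. This is only a sketch under strong assumptions that are mine, not the paper's: every patient guesses "active" if they improved and "sham" if they did not, nobody answers "don't know", and the device itself gives no cues. The improvement rates are hypothetical placeholders, not the trial's figures.

```python
def expected_bi(p_improve: float, in_active_arm: bool) -> float:
    """Expected Bang-style BI for one arm under the toy guessing rule.

    Toy rule (an assumption): improved -> guess "active",
    not improved -> guess "sham". With no "don't know" answers,
    BI = P(correct) - P(incorrect) = 2 * P(correct) - 1.
    """
    if not 0.0 <= p_improve <= 1.0:
        raise ValueError("p_improve must be between 0 and 1")
    p_correct = p_improve if in_active_arm else 1.0 - p_improve
    return 2.0 * p_correct - 1.0


# Hypothetical improvement rates for illustration only.
scenarios = [
    ("active arm, 35% improve", 0.35, True),
    ("sham arm, 25% improve", 0.25, False),
]

for label, p, active in scenarios:
    print(f"{label}: expected BI = {expected_bi(p, active):+.2f}")
# active arm, 35% improve: expected BI = -0.30
# sham arm, 25% improve: expected BI = +0.50
```

Under this rule, any arm where fewer than half of patients improve leans towards guessing sham, whichever arm it actually is. So in this toy model, outcome-based guessing on its own gives a positive BI in the sham arm but a negative one in the active arm, unless more than half of the active arm improves.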

Utsikt

Senior Member (Voting Rights)

The authors are arguably hiding disadvantageous information:

> Bang’s blinding index scores were <0.3 for patients, joint assessors and co-investigators, which indicated satisfactory blinding at the time of primary end point assessment (Supplementary Table 4).

For all three measurements, there were instances of the BI being ≥0.3 at 1 month.

The patients’ BI values were also substantially higher than the values of the evaluator and Co-PI, potentially indicating that the patients had more info about their allocation than the people involved in the trial. That would only be the case if the blinding was broken either by the device or the effect on their health.

Last edited: