I was thinking about this yesterday. Looking at the entire sample of healthy volunteers and patients, people with success rates on hard tasks above 90% chose hard tasks 44% of the time, while people with success rates on hard tasks below 90% chose hard tasks 33% of the time. Going lower makes no further difference, i.e., there is no clear dose-response relationship in which people with dismal completion rates choose hard tasks even less often, though one might emerge in a larger sample. And it's perfectly reasonable to suggest that failing just a bit is enough to affect your choices. Could those with more stats skills than I have check whether this alone could account for the group differences in hard vs easy task choice?

| Group | Hard-task success above 90% | Hard-task success below 90% |
|---|---|---|
| Patients (pts) | 6/15 | 9/15 |
| Healthy volunteers (HVs) | 14/16 | 2/16 |
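As a first step on the stats question, here is a minimal sketch of a two-sided Fisher exact test on the counts above, written out by hand in Python so it needs no extra libraries. It only asks whether patients are more likely than HVs to fall below 90% hard-task success; it says nothing yet about choices. The function name and the row/column layout are my own, and with only 31 people any result is fragile.

```python
from math import comb

# Counts from the post (hard-task success band per group):
#            above 90%  below 90%
PATIENTS = (6, 9)   # 15 patients
HVS = (14, 2)       # 16 healthy volunteers


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact test for the 2x2 table [[a, b], [c, d]].

    Holding the row and column totals fixed, sum the hypergeometric
    probabilities of every table that is no more likely than the observed one.
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    total = comb(n, row1)

    def prob(k: int) -> float:
        # P(top-left cell == k) given the fixed margins.
        return comb(col1, k) * comb(n - col1, row1 - k) / total

    observed = prob(a)
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    # The tiny relative tolerance treats floating-point near-ties as "as extreme".
    return sum(p for p in map(prob, range(lo, hi + 1)) if p <= observed * (1 + 1e-7))


p = fisher_exact_two_sided(*PATIENTS, *HVS)
print(f"two-sided p = {p:.4f}")  # prints: two-sided p = 0.0091
```

So the success-rate split itself does look different between the groups; whether that split then explains the difference in hard choices is a separate question.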

That 33% is pretty key when you are thinking about those with disability.
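One rough way to ask whether success rate alone could account for the group difference is direct standardisation: apply the pooled hard-choice rates (44% for people above 90% success, 33% below) to each group's mix of people and see what group gap that alone predicts. This is a sketch under strong assumptions: that the pooled rates apply equally to patients and HVs within each band, and that each person counts equally (it isn't stated whether the 44%/33% are person-level averages or pooled over trials). The observed group difference isn't given here, so the predicted gap would still need comparing against it; a regression with success band as a covariate would be the more careful version.

```python
# Hard-choice rates by hard-task success band, pooled over the whole sample (from the post).
RATE_HARD_CHOICE = {"above_90": 0.44, "below_90": 0.33}

# Number of people in each success band, per group (from the post).
GROUPS = {
    "patients": {"above_90": 6, "below_90": 9},
    "HVs": {"above_90": 14, "below_90": 2},
}


def expected_hard_rate(counts: dict[str, int]) -> float:
    """Predicted share of hard choices for a group, ASSUMING the hard-choice
    rate depends only on success band and every person is weighted equally."""
    n = sum(counts.values())
    return sum(RATE_HARD_CHOICE[band] * k for band, k in counts.items()) / n


expected = {name: expected_hard_rate(c) for name, c in GROUPS.items()}
gap = expected["HVs"] - expected["patients"]

for name, rate in expected.items():
    print(f"{name}: {rate:.1%}")
print(f"gap predicted by success band alone: {gap * 100:.1f} percentage points")
```

With these numbers the predicted rates come out at about 37.4% for patients and 42.6% for HVs, i.e. a gap of roughly 5 percentage points that the success-band mix alone would produce.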

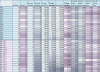

Since yesterday I've done another table out of curiosity (also looking at button presses and choice time at the individual level, as I was thinking along similar lines to @Karen Kirke), because if you are struggling to complete the tasks as the test is designed, you have two choices: do fewer so that you complete more of them, or fail more.

I think we can assume that for anyone selecting hard less than 33% of the time, it's probably about not being able to rather than 'choice' in the inferred sense. And if you know you can't do 13 hard tasks (assuming, e.g., 40 tasks per person, 40/3 ≈ 13), except that you don't know how many will be coming up (certainly not at the start, until you get more of a sense of it), then you have to be choosier about when you do select hard. That choice might include whether your finger is 'well rested' as well as probability and reward magnitude.

So I was just nosing at the data, which I plonked in a table ordered by % hard completed, to see whether there were correlations with increased time for hard choices among people who were 'doing less to complete a higher %'.

There is a group a few rows up from the bottom in the ME-CFS section who generally had less than 33% hard selected. Their average hard choice time is generally a bit higher, indicating they had a bit more to think about, and you can see in the 'number of hard' column that they selected fewer hard tasks than the rows above and below them. For some of them this translated into completing more; others still only completed 3 of them anyway.

You can also see this in the two near the bottom of the HVs, HVN and HVB: they had a lower % hard selected, and the gap between their average choice time for 'hard' and their average choice time overall is bigger than for the rest of the HVs.

It's an imperfect pattern, though, which shows how many interacting factors are probably at play given all of these complications. ME-CFS I has very quick decision times and only selected 23% hard. I'm guessing that ME-CFS A's relatively swift choice times, compared with the rest of the 'group' around them in this table, are down to their 31% hard being a sign that they just picked the heuristic of going with 'only high probability' (which would mean 33% hard), thereby reducing the cognitive load and, as far as their physical capability goes, just going with the flow and letting the chips fall where they fell?
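To make the 'only high probability' heuristic concrete: if the three probability levels turn up equally often, choosing hard only on high-probability trials gives about a third hard choices, i.e. roughly 13 of 40 trials. This is a minimal sketch, assuming a hypothetical schedule that simply cycles low/medium/high (the real task's trial ordering isn't described here) and the 40-trial example used earlier in the post; the level names and function names are illustrative only.

```python
from collections import Counter

# Hypothetical: three win-probability levels, assumed equally frequent across trials.
LEVELS = ("low", "medium", "high")


def balanced_trials(n_trials: int) -> list[str]:
    """Hypothetical balanced schedule that just cycles through the levels in order."""
    return [LEVELS[i % len(LEVELS)] for i in range(n_trials)]


def hard_only_when_high(level: str) -> bool:
    """The 'only high probability' heuristic: choose hard if and only if probability is high."""
    return level == "high"


def share_hard(trials: list[str]) -> float:
    """Fraction of trials on which the heuristic picks the hard task."""
    return sum(hard_only_when_high(level) for level in trials) / len(trials)


trials = balanced_trials(40)  # the post's example of 40 tasks per person
print(Counter(trials))
print(f"hard chosen on {share_hard(trials):.1%} of trials")
```

With 40 trials the cycle contains 13 high-probability trials, so the heuristic gives 32.5% hard, close to ME-CFS A's 31%; over longer runs it tends to exactly one third.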

My point is that the issues, I think, do affect how the game is played, but, not unusually, they would do so in a way that isn't consistent across everyone.

So the capability problem means the data can't really be analysed, because it has forced people into completely different 'games'. And because the instructions and set-up necessarily weren't designed to accommodate that, you are going to get different strategies across individuals for coping with those issues (not least because they have to second-guess what the real point was of being faced with a task they couldn't do, and whether they were meant to plough through because the researchers were looking for resilience in the face of an impossible task).

EDIT: table edited for colour. @Evergreen, let me know if this works; I'll keep the hex numbers if so.
