The thing that surprises me is how poor the outcomes are at 3 month follow up. It looks like most of the so called improvement happens in the first 4 sessions - so still well within the first flush of gratitude that they are getting help and persuasion to see the questionnaires differently and declare themselves improved. And some may improve in that time from idiopathic CF if they are getting advice on things like sleep hygiene. After that, the so called improvement is very small.

IOW, a standard decay curve you would expect from no actual therapeutic effect and regression to the mean.

Cognitive Behavioural Therapy for chronic fatigue and CFS: outcomes from a specialist clinic in the UK (2020) Adamson, Wessely, Chalder

- Thread starter Esther12

Snow Leopard

Senior Member (Voting Rights)

This is ridiculous, the more I look at it the more it's full of holes. They say 31% loss to follow up. That may be true overall, in that maybe 31% gave no follow up information, but:

CFQ 48.5% not followed up.

SF36 47.4% not followed up.

Indeed.

Generally speaking, if people were really doing much better and pleased, grateful for the therapy, there would be a much higher followup rate.

Publishing clinical data like this is only ever "suggestive" quality evidence, but I'm sure this will be cited as strong evidence for the acceptability, despite the fact that this is a self-selected (participation) group with a high dropout rate...

Sly Saint

Senior Member (Voting Rights)

So is there something dodgy going on with their comparison of means?

Not sure if this is applicable, but I coincidentally was just reading this on CBT Watch:

the success of Cognitive Behaviour Therapy (CBT) in low intensity outcome studies has been gauged solely in terms of a metric called effect size. The (within subject) effect size is calculated by subtracting the post treatment mean of a sample from the pre treatment mean and dividing by the spread of the results (the pooled standard deviation). [Alternately if there has been a comparison group in the CBT studies the means that are subtracted, are the post treatment means of each group, again divided by the standard deviation, to yield a between subjects effect size]. Assuming that a between subjects effect size has been calculated all this tells one is the size of the difference between the two groups, it does not tell you whether everyone improved a little, or some greatly improved whilst some did very poorly.

http://www.cbtwatch.com/whats-the-o...y-old-self-with-this-psychological-treatment/
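As a rough illustration of the within-subject effect size described in that quote, here is a minimal Python sketch. It assumes the simplest pooled standard deviation (the root mean square of the two SDs, which ignores unequal sample sizes and any pre/post correlation), and the function name is hypothetical. The inputs are the SF-36 summary figures quoted from the paper elsewhere in this thread. This is not necessarily how the authors computed their own values.

```python
import math

def within_subject_effect_size(mean_pre, sd_pre, mean_post, sd_post):
    """Difference in means divided by a simple pooled SD (assumed formula)."""
    pooled_sd = math.sqrt((sd_pre ** 2 + sd_post ** 2) / 2)
    return (mean_post - mean_pre) / pooled_sd

# SF-36 figures quoted in this thread: start M = 47.81 (SD 25.74),
# end of treatment M = 59.56 (SD 27.3)
d = within_subject_effect_size(47.81, 25.74, 59.56, 27.3)
print(f"d = {d:.2f}")
```

Whatever value comes out, it is a single number built from two means and a spread. It cannot distinguish everyone improving a little from a few improving a lot while others get worse.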

Barry

Senior Member (Voting Rights)

If a patient was missing 25% or less of the data from any questionnaire, a prorated score was calculated (this used the mean of the remaining items from the same individual to calculate a prorated score).

"missing 25% or less"?

How was an individual's data processed if more than 25% was missing? Especially if it was missing because the individual was performing badly.
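To make the quoted prorating rule concrete, here is a minimal Python sketch. The function name and the `None` convention for unanswered items are assumptions for illustration. Returning `None` when more than 25% is missing is also an assumption, since the quoted passage does not say what was done in that case, which is exactly the open question.

```python
def prorated_total(items, max_missing_fraction=0.25):
    """Return a prorated questionnaire total, or None if too much is missing.

    `items` is a list of item scores, with None marking an unanswered item.
    """
    if not items:
        return None
    answered = [x for x in items if x is not None]
    missing = len(items) - len(answered)
    if missing > max_missing_fraction * len(items):
        # Assumption: the paper's handling of this case is not stated.
        return None
    item_mean = sum(answered) / len(answered)
    # Equivalent to filling each missing item with the mean of the answered ones
    return item_mean * len(items)

print(prorated_total([2, 2, None, 2]))     # one of four missing: prorated
print(prorated_total([1, None, None, 3]))  # half missing: no score returned
```

If missing items cluster among patients who are doing badly, neither the prorated scores nor the excluded ones are missing at random, so the rule matters for the averages.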

Barry

Senior Member (Voting Rights)

Partial evidence is on a par with partial truth. Low-quality-evidence by omission is on a par with untruthfulness by omission. If you omit significant evidence or truth, you can strongly imply falsehoods. It's why in a court of law you swear to tell the whole truth.

CFQ

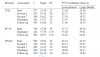

Start: 977 patients, mean CFQ 24.19

Session 4: 392 patients, mean CFQ 19.45

Follow-up: 503 patients, mean CFQ 18.60

SF-36

Start: 768 patients, mean 47.6

Follow-up 404 patients, mean 58.51

So is there something dodgy going on with their comparison of means? The initial figures are calculated on close to double the number of patients as the final figures, and they say themselves the ones lost to follow up had worse initial scores. So most or all of the 'improvement' in the averages could be simply down to the sickest patients no longer being included.
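The "not followed up" percentages can be checked directly from the patient counts listed above. A minimal Python sketch, with a made-up function name:

```python
def percent_not_followed_up(n_start, n_follow_up):
    """Percentage of starting patients with no follow-up score."""
    return 100 * (n_start - n_follow_up) / n_start

# Patient counts quoted above from the paper's figures
cfq = percent_not_followed_up(977, 503)
sf36 = percent_not_followed_up(768, 404)

print(f"CFQ:   {cfq:.1f}% not followed up")   # 48.5%
print(f"SF-36: {sf36:.1f}% not followed up")  # 47.4%
```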

To take a simple example, if you have 10 patients, half of whom score 5 points on a scale and half score 10 points, the mean at the start is 7.5. If all the ones scoring 5 points drop out, and the rest don't change their scores, the mean at the end is 10. Nobody has increased their score, but the means have improved from 7.5 to 10. Magic.

Surely they couldn't have done such a trick could they? What am I missing Ms Chalder or Mr Wessely if you're reading this? I think you need to show your working.
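The ten-patient example above can be reproduced in a few lines of Python. The scores are the hypothetical ones from the example, not data from the paper. The last step shows the like-for-like comparison, restricted to patients who have both a start and a follow-up score.

```python
from statistics import mean

# Ten hypothetical patients: five score 5 and five score 10 at the start
start_scores = [5] * 5 + [10] * 5

# At follow-up every low scorer has dropped out; nobody's score has changed
follow_up_scores = [s for s in start_scores if s == 10]

print(mean(start_scores))      # 7.5
print(mean(follow_up_scores))  # 10

# Like-for-like: compare only the patients who have both scores
completers_start = [s for s in start_scores if s == 10]
change_in_completers = mean(follow_up_scores) - mean(completers_start)
print(change_in_completers)    # 0
```

The group mean rises by 2.5 points while the change among patients actually measured twice is zero.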

RuthT

Senior Member (Voting Rights)

Interesting. I know this service. In this time frame. Poor CBT delivered by one of their ‘top‘ people who trains others. I didn’t submit a complaint at the time, just withdrew with my GP consent due to terrible note taking and significant fundamental factual inaccuracies made in a basic report indicating poor listening skills and also misuse of ‘drop down’ menus.

Jonathan Edwards

Senior Member (Voting Rights)

I am trying to catch up with threads for three days.

Just to note that there are no NICE criteria for diagnosis of CfS. Just criteria for when to consider the diagnosis.

rvallee

Senior Member (Voting Rights)

So while Wessely was abusing his influence to have a puff piece published to an international audience loudly screaming they were being silenced and had to abandon research in whatever they think CFS is, he was working on this. Which, to be fair, is technically accurate, because this is not research, but it is massively hypocritical. Wessely did the same in the early 2000s with a round of airing of grievances about leaving the field even as he was actually working on PACE. The lies are so blatant.

Even worse is that this strictly used self-reported symptoms, which the BPS ideology considers to be worthless when they report harm, illness or deterioration, yet which somehow are valid when, and only when, they come from their limited set of questions that miss 90% of the symptoms. What incredible jerks these people are.

SF-36 significantly improving over treatment; start of treatment M = 47.81 (SD=25.74); end of treatment M = 59.56 (SD=27.3).

A SF-36 of 60 is the average physical function of 80 year-olds in the general population. Similar standard deviation, even. Now that it's been normalized that they can use that absurd threshold of recovery, they simply use it again, because of course. Which means that whatever journal they publish this in has vetted and agrees that 20 year-olds with the physical function of 80 year-olds are perfectly fit and healthy. What a sick, cruel joke these people are.

Patients were assessed throughout their treatment using self-report measures including the Chalder Fatigue Scale, Short Form Health Survey (SF-36), Hospital Anxiety and Depression Scale (HADS), Global Improvement and Satisfaction

Aside from the SF-36, which is non-specific, none of those scores are of any relevance to ME/CFS.

According to self-reported accounts of their symptoms, 754 (76%) participants met Oxford criteria for CFS and 518 (52%) met CDC criteria for CFS.

As Trish said this makes no sense whatsoever, Oxford criteria are the loosest ones. WTH is this nonsense? Again showing that peer review in clinical psychology is entirely over style and ignores substance.

Jonathan Edwards

Senior Member (Voting Rights)

Patients fatigue, physical functioning and social adjustment all significantly improved following CBT for CFS in a naturalistic outpatient setting. These findings support the growing evidence from previous RCTs and suggests that CBT could be an effective treatment in routine treatment settings.

This is the 'pragmatic trial' nonsense - doing trials in 'naturalistic settings'.

Worth noting that 'naturalistic' actually means 'to give the impression or appearance of reality'. Actual reality is called 'natural'. It seems that the BPS sorority continue to find ways to malaprop the language of philosophy.

These findings support the growing evidence from previous RCTs

This is such crap--the "growing evidence from previous RCTs." What growing evidence? Each study shows the opposite of what they claim it shows.

Snowdrop

Senior Member (Voting Rights)

So they have trotted out the captain of the Titanic. While the conclusions are all optimism, this seems to me like striking up the band. Someone got a glimpse of the iceberg and is trying to steer the behemoth away from the dangerous reality before them. Back to delusion land.

Stay tuned. Let's see if they make it safe to harbour this time. Or will Covid-19 be the reality that breaches their impenetrable (sic) hulls?

Jonathan Edwards

Senior Member (Voting Rights)

This is such crap--the "growing evidence from previous RCTs." What growing evidence? Each study shows the opposite of what they claim it shows.

And what about the next bit?

suggests that CBT could be an effective treatment in routine treatment settings.

This is quite a climbdown from 1989 when it was assumed that CBT was an effective treatment in routine settings that just needed a couple more trials...

wigglethemouse

Senior Member (Voting Rights)

This is ridiculous, the more I look at it the more it's full of holes. They say 31% loss to follow up. That may be true overall, in that maybe 31% gave no follow up information, but:

CFQ 48.5% not followed up.

SF36 47.4% not followed up.

Can I ask a dumb question? If there is such a huge dropout how can they get away with such blatant lies in the results section of the Abstract?

Results

Patients fatigue, physical functioning and social adjustment scores significantly improved over the duration of treatment with medium to large effect sizes (|d|=0.45 – 0.91). Furthermore 85% of patients self-reported that they felt an improvement in their fatigue at follow-up and 90% were satisfied with their treatment. None of the regression models convincingly predicted improvement in outcomes with the best model being (R2 =0.137).

If only 52% of patients were followed up, how can 90% of them be satisfied at follow-up?

Ahh found it. They made up the results to get the follow-up numbers in the results section

[EDIT - the following quote only applies to the "Predictors of outcome" section, which showed there were no predictors]

For those patients without end of treatment scores, the nearest follow-up score was used to compute an end point and was defined as a computed follow-up score.
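A minimal Python sketch of the substitution rule in that quote, which per the edit note applies to the predictors-of-outcome analysis. The function name, the `None` convention for a missing score, and reading "nearest" as "earliest available follow-up after treatment" are all assumptions for illustration. The quoted sentence does not spell these details out.

```python
def computed_end_point(end_of_treatment, follow_ups):
    """Return (score, was_computed).

    `follow_ups` lists follow-up scores in time order, None where missing.
    """
    if end_of_treatment is not None:
        return end_of_treatment, False
    for score in follow_ups:
        if score is not None:
            # Earliest available follow-up stands in for the missing score
            return score, True
    # No end-of-treatment score and no follow-up: nothing to substitute
    return None, False

print(computed_end_point(18, [20, 17]))      # real end-of-treatment score used
print(computed_end_point(None, [None, 21]))  # follow-up score substituted
print(computed_end_point(None, [None]))      # patient has no usable end point
```

Under this reading, a patient with no end-of-treatment score and no follow-up still has no end point at all, so the rule by itself cannot fill in data for complete dropouts.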

Okay. Let's look into drop out some more. Note: the study goes back to 2002!!! Notice they say a 31% dropout.

Drop-out was defined as those patients who did not complete any questionnaires at the end of treatment, or any follow-up and was 31% in this naturalistic setting. Reason for drop-out was only recorded since 2007 and therefore we have no data from 2002 to 2007.

But as @Trish highlighted, about 48% of patients were not followed up in any one of the questionnaires.

So let me summarise.

1. Reading the abstract, data was available for 995 patients and 85% felt the treatment showed an improvement. That sounds good.

2. Quick look at the meat and potatoes

- They used Oxford and CDC criteria that don't require PEM, a key no-no in any modern CFS paper, and even then only 754 (76%) participants met Oxford criteria for CFS and 518 (52%) met CDC criteria for CFS. So even by the loosest of criteria, only about 50% of those starting CBT actually had CFS

- 48% dropped out of answering some questionnaires, but only 31% dropped out of answering all questionnaires,

- They "made up the answers" for dropouts which was about half the participant number and then presented final results as if all had participated.

Do they even discuss how the CBT treatment changed over those 14 years and what versions of questionnaires were used when?

Okay, I know I repeated others statements, but I had to verify them for myself as they are quite outrageous. It's the abstract and analysis in the paper that is outrageous.

EDIT : I'm surprised that they didn't present a subsection of analysis on only patients that met the CDC criteria? That implies that the results for that are not as stunning!

Okay, I know I repeated others statements, but I had to verify them for myself as they are quite outrageous. It's the abstract and analysis in the paper that is outrageous.

very helpful summary. Since this is Trudie Chalder of "back-to-normal" fame, I guess it's possible to find a way to claim success no matter how awful the results.

rvallee

Senior Member (Voting Rights)

This is such crap--the "growing evidence from previous RCTs." What growing evidence? Each study shows the opposite of what they claim it shows.

The evidence that it's possible to get away with total BS as long as it comes "from a BPS perspective"? Hard to dispute that. They can even actually use the words randomized control (sic?) trial even though they haven't actually done a single actual controlled trial. And they get away with it, nobody's stopping them.

It's actually remarkable and pretty much unique in all the fields of science to build hundreds of careers entirely out of pure bullshit pseudoscience. I mean seriously this is impressive in some ways. These people turned con artistry into con science, it's no small achievement, while being celebrated for it, no less.

They can even get away with making bullshit statements about getting away with bullshit pseudoscience and get that published. This genuinely deserves a medal made out of the crushed bones of their victims, it's no small feat to destroy millions of lives on purpose while actually being awarded for it BY THE MEDICAL PROFESSION. No one can touch that level of destructive psychopathy, these people truly are the worst people to have ever practiced a serious profession. There is definitely growing evidence of that.

rvallee

Senior Member (Voting Rights)

If only 52% of patients were followed up, how can 90% of them be satisfied at follow-up?

Ahh found it. They made up the results to get the follow-up numbers in the results section

Ah! Nice one. I remember they did the same with PACE on some of the data for dropouts.

Enforcement is 9/10 of the law. Same is true in any system of rules, once flouting the rules is normalized for some they will do nothing but keep flouting them. This whole thing where people are exempted from the rules? Yeah, it always leads to the same outcome: disaster. Well, disaster for many, knighthoods and unlimited funding for a few.

rvallee

Senior Member (Voting Rights)

Okay. Let's look into drop out some more. Note: the study goes back to 2002!!! Notice they say a 31% dropout.

Uhhhhhhh.

Data was available for 995 patients receiving CBT for CFS at an outpatient clinic in the UK.

Participants were referred consecutively to a specialist unit for Chronic Fatigue or CFS.

OK found it, this is a 14-year retrospective study:

We retrospectively analysed data collected during the previous 14 years of the clinic

So if we take at face value, this goes back to 2006, they blame ~30% of dropouts on data not being available before 2007, meaning those dropouts occurred between 2006-2007? Or the data are available but are simply buried in this BS excuse. Why would they even be included then? This excuse makes no sense!

At 18-months to five years, a third of participants were not severely fatigued and almost three quarters had good levels of physical functioning

Yet scored an average of less than 60 on the SF-36. Bald-faced lies. How do they get away with lying all the damn time?! These people are sadists, they ruin lives and then lie, lie, LIE.

Note the keywords:

Evidence based practice < Clinical, Psychotherapy < Psychiatry < Clinical, Somatoform disorders < Psychiatry < Clinical, Mood disorders (including depression) < Psychiatry < Clinical, Other psychiatry < Psychiatry < Clinical

Dr Carrot

Senior Member (Voting Rights)

I was personally seen at this clinic. It took 12+ months to get an appointment - once I did I had an assessment, and after the assessment it was a further 12 months for a first appointment. The ‘therapy’ was just talking about increasing activity and addressing sleep (why didn’t I think of that??) and I found myself repeating the same thing week after week so I ended up discharging myself. The therapist was pleasant but the protocol was completely ridiculous.

wigglethemouse

Senior Member (Voting Rights)

So if we take at face value, this goes back to 2006

Data from 2002 to 2018 from patient visits 2002-2016

This naturalistic study used data retrospectively from patients who were seen in the unit between August 2002 and August 2016. Data was collected from August 2002 to February 2018 inclusive, to include follow up appointments.