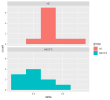

I think they did consider whether it might be due to increased variance, and found no general difference in variance between the groups. For changes due to exercise specifically, this would be the Delta plot in S3A below. I don't think the data are compatible with the explanation that ME/CFS simply shows much more variance than the control group.

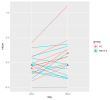

The plots you posted above may show increased variance in ME/CFS, but they cover only a small selection of compounds. Those compounds were chosen partly because they were significant in the control group, and that significance could itself be partly due to low variance in the controls.

Since the following indicates that the variance doesn't differ much overall, you would expect to see a similar number of significant findings in ME/CFS if the exercise-induced changes were similar in both groups.

> The fact that there are metabolite changes in the controls and not in the patients is not due to increased variation in metabolite levels in the ME/CFS patients compared to the controls. There is no trend toward higher standard deviation in the ME/CFS group when comparing the standard deviations for ME/CFS to controls for each compound (Supplementary Figure S3).
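To illustrate both points (the selection effect and the expected number of hits), here's a small toy simulation. It's my own sketch, not anything from the paper. It assumes both groups have *identical* true distributions for every compound's exercise-induced change (normal, with made-up values: 10 people per group, mean change 0.5, SD 1). It tests each group with a one-sample t-test on the changes, then compares the ME/CFS-to-control SD ratio across all compounds against the ratio across only the compounds that came out significant in the controls. The function name and all parameter values are hypothetical.

```python
import random
import statistics


def simulate(n_compounds=5000, n_per_group=10, true_change=0.5, true_sd=1.0,
             t_crit=2.262, seed=1):
    """Hypothetical toy model: both groups share the same true distribution
    of exercise-induced change (post - pre) for every compound.
    t_crit = 2.262 is the two-sided 5% critical value for df = 9."""
    rng = random.Random(seed)
    sig_ctrl = sig_mecfs = 0
    ratios_all, ratios_sel = [], []
    for _ in range(n_compounds):
        ctrl = [rng.gauss(true_change, true_sd) for _ in range(n_per_group)]
        mecfs = [rng.gauss(true_change, true_sd) for _ in range(n_per_group)]
        sd_c, sd_m = statistics.stdev(ctrl), statistics.stdev(mecfs)
        # One-sample t statistic for "mean change != 0" in each group
        t_c = statistics.mean(ctrl) / (sd_c / n_per_group ** 0.5)
        t_m = statistics.mean(mecfs) / (sd_m / n_per_group ** 0.5)
        sig_ctrl += abs(t_c) > t_crit
        sig_mecfs += abs(t_m) > t_crit
        ratio = sd_m / sd_c  # ME/CFS SD relative to control SD
        ratios_all.append(ratio)
        if abs(t_c) > t_crit:  # keep only compounds "significant in controls"
            ratios_sel.append(ratio)
    return {
        "sig_ctrl": sig_ctrl,
        "sig_mecfs": sig_mecfs,
        "median_ratio_all": statistics.median(ratios_all),
        "median_ratio_selected": statistics.median(ratios_sel),
    }


if __name__ == "__main__":
    print(simulate())
```

In this setup you should see roughly the same number of significant compounds in both groups, and a median SD ratio close to 1 across all compounds. Among the compounds picked for being significant in the controls, though, the ratio comes out above 1. ME/CFS looks more variable there purely because of how those compounds were selected.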