So looking at the meta charts comparing studies, I was starting to think more and more that a 2-day CPET simply measures deconditioning, given that the latest study, which possibly has the best "fitness"-matched control group, also showed the largest decrease in controls. A couple of the other "sedentary" control groups showed decreases too.

So I wanted to see if there's a correlation, across studies, between mean baseline VO2peak and the mean day-to-day change in workload at AT (anaerobic threshold). VO2peak is supposedly a decent metric of physical fitness or deconditioning. So if the studies whose control groups had the lowest VO2peak at baseline also showed the largest day-to-day decreases in workload, I'd take that as a clue that it's just about deconditioning.
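To make the two axes concrete, here's a minimal sketch of how each study point could be built. The study names and numbers are made-up placeholders (not values from any paper), and whether the difference should be absolute or a percentage is an assumption on my part, so both are shown.

```python
# Hypothetical study-level means for healthy controls.
# Placeholder numbers for illustration only, NOT real study data.
studies = [
    {"study": "Study A", "vo2peak_day1": 25.0, "at_workload_day1": 60.0, "at_workload_day2": 57.0},
    {"study": "Study B", "vo2peak_day1": 31.0, "at_workload_day1": 80.0, "at_workload_day2": 82.0},
    {"study": "Study C", "vo2peak_day1": 22.0, "at_workload_day1": 55.0, "at_workload_day2": 50.0},
]


def day_to_day_change(day1, day2):
    """Absolute change from day 1 to day 2 (negative means a decrease)."""
    return day2 - day1


def percent_change(day1, day2):
    """Change from day 1 to day 2 as a percentage of the day 1 value."""
    return 100.0 * (day2 - day1) / day1


# One (x, y) point per study: baseline VO2peak vs change in workload at AT.
points = [
    (s["vo2peak_day1"], day_to_day_change(s["at_workload_day1"], s["at_workload_day2"]))
    for s in studies
]

for s, (x, y) in zip(studies, points):
    pct = percent_change(s["at_workload_day1"], s["at_workload_day2"])
    print(f'{s["study"]}: baseline VO2peak={x}, AT workload change={y} ({pct:.1f}%)')
```

If deconditioning were the whole story, the points with the lowest x would tend to have the most negative y.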

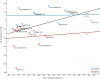

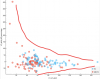

Here are all the study points. The same studies from the meta charts I made earlier.

The blue dots, healthy controls, are what I'm interested in. For ME/CFS, sure, I expect a correlation. The hypothesis is that the sicker they are, the more deconditioned they are from being sedentary (lower VO2), and the whole CPET hypothesis is that they also have larger decreases in workload from PEM. The large correlation for the combined phenotypes also makes sense, since most of the ME/CFS points are clustered in the bottom left corner for the reason just given, and the HCs are more spread out along the top.

There does not seem to be a strong correlation for controls. I checked, and both features for the HC groups pass the Shapiro-Wilk normality test (the ME/CFS workload difference does not).
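For anyone who wants to run the same kind of normality check, here's a rough sketch using `scipy.stats.shapiro`. The 0.05 cutoff, the helper name `passes_shapiro`, and the sample values are my own assumptions for illustration, not the actual study means.

```python
from scipy import stats


def passes_shapiro(values, alpha=0.05):
    """Run Shapiro-Wilk; return (passes, W statistic, p-value).

    'Passes' here just means we fail to reject normality at the given alpha
    (alpha=0.05 is an assumed, conventional cutoff).
    """
    result = stats.shapiro(values)
    p_value = float(result.pvalue)
    return bool(p_value > alpha), float(result.statistic), p_value


# Hypothetical control-group baseline VO2peak means (placeholders, NOT real data).
hc_baseline_vo2peak = [24.1, 27.5, 30.2, 22.8, 33.0, 26.4, 28.9, 25.3]

ok, w, p = passes_shapiro(hc_baseline_vo2peak)
print(f"W={w:.3f}, p={p:.3f}, passes normality check: {ok}")
```

With only a handful of studies the test has little power, so passing it is weak evidence of normality rather than proof.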

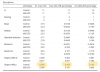

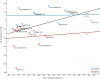

So I think I can use Pearson's correlation for just the studies' control means:

Just in case, here's Spearman correlation on the same metrics:

No correlation for either difference metric.
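As a sanity check on what those two statistics do, here's a dependency-free sketch of Pearson's r and Spearman's rho (Spearman is just Pearson on the ranks). The p-value comes from a permutation test, which is a technique I'm swapping in to avoid needing a stats library, not how the numbers in the charts were produced. The data are placeholders, not the real study means.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def average_ranks(values):
    """1-based ranks, with tied values sharing the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman rank correlation: Pearson's r computed on the ranks."""
    return pearson_r(average_ranks(x), average_ranks(y))


def permutation_p(x, y, stat, n_perm=10000, seed=0):
    """Two-sided permutation p-value: shuffle y and compare |stat| to observed."""
    rng = random.Random(seed)
    observed = abs(stat(x, y))
    shuffled = list(y)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if abs(stat(x, shuffled)) >= observed - 1e-12:
            hits += 1
    return (hits + 1) / (n_perm + 1)


# Hypothetical control-group means per study (placeholders, NOT real data).
baseline_vo2peak = [24.1, 27.5, 30.2, 22.8, 33.0, 26.4]
at_workload_change = [-3.0, 1.5, -6.2, 0.8, -1.1, -4.5]

for name, stat in (("Pearson r", pearson_r), ("Spearman rho", spearman_rho)):
    value = stat(baseline_vo2peak, at_workload_change)
    p = permutation_p(baseline_vo2peak, at_workload_change, stat)
    print(f"{name}: {value:.3f} (permutation p ~ {p:.3f})")
```

With this few points per group, even a fairly large r can easily fail to reach significance, which is worth keeping in mind when reading "no correlation".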

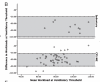

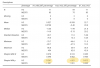

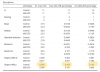

I posted this before, but here are the correlations again for individual participants in the Keller study:

In this case, for controls, the distribution for baseline VO2peak and VO2peak difference did not pass normality, so I'm not sure the r values above apply.

So I did Spearman correlation with this one:

Again no correlation in controls.

So I don't see any indication that fitness, as defined by baseline VO2peak, has anything to do with day 2 decreases in workload at AT or in VO2peak.

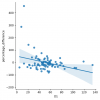

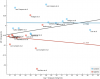

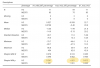

And just for completeness, here are the scatter plots for baseline VO2peak vs VO2peak difference for all studies and for Keller individuals:

All studies:

Keller 2024:

Keller 2024:

Interestingly, the charts make it look like the fitter you are, the worse you do on the 2-day CPET in terms of peak VO2, in all groups, though none of the correlations are significant.