I totally wasted too much time on this, but I found out you can make animations in Matplotlib, so I had to try it. I animated that graph of day 1 workload vs. change in workload from a few posts up to show how it changes if you normalize the change value based on the day 1 value, which basically makes the regression lines horizontal.

I didn't even write any of the code myself, it was pretty much 100% AI, but it still took over 5 hours of telling it to tweak things to get it to work correctly.

It's a GIF that might be kind of a large file size (~2 MB) for people with slow internet, so click the thumbnail if you want to view it.

Anyway, the important part, the statistics, took a few minutes.

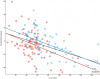

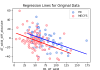

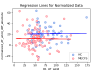

How I normalized the values: First I got the slopes of the two regression lines for ME/CFS and HC from the plot above of D1_AT_wkld vs AT_wkld_diff_absolute. Since the lines for the groups are independently calculated, and yet pretty much parallel, I think there's a good chance they represent close to the true real world slope of how day 1 workload affects how much your workload will change on the next test, at least for fairly sedentary people, which both groups were. Since they're not exactly the same slope, I got the average of the two, which came out to about -0.3274.

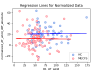

To normalize the change value, I subtracted (-0.3274*D1_wkld) from the change. This makes the regression lines just about horizontal, so individuals with different day 1 workloads can now be compared: the data no longer slopes towards greater decreases for people who start with higher values, which the healthy controls did.

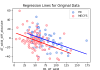

Here's the original and the normalized data with the regression lines, same as in the GIF:

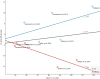

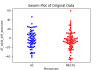

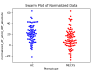

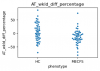

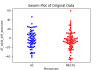

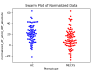

And here are the groups side by side before and after normalization:

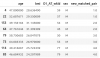

And now the statistical tests. Using normalized change, I get p values less than 0.001. Cohen's d effect size is 0.535.
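For anyone wondering how an effect size like that is computed, here's a minimal sketch of Cohen's d using the pooled standard deviation. This is an assumption on my part about the formula: the stats software may use a slightly different variant. The numbers in the lists are invented for illustration and `cohens_d` is just a helper name I chose.

```python
from math import sqrt
from statistics import mean, variance

def cohens_d(a, b):
    """Cohen's d with pooled SD: (mean(a) - mean(b)) / pooled standard deviation."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / sqrt(pooled_var)

# Invented normalized changes in watts, for illustration only.
hc = [30.0, 22.0, 35.0, 18.0, 27.0, 25.0]
me = [20.0, 15.0, 28.0, 10.0, 24.0, 17.0]

d = cohens_d(hc, me)
print(round(d, 3))
```

A positive d here means the first group's mean is higher than the second's, measured in units of the pooled standard deviation.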

Again the equation for normalization is:

`normalized_AT_wkld_diff_absolute = AT_wkld_diff_absolute - (-0.3274 * D1_AT_wkld)`

And that -0.3274 number is just the slope of the line halfway between the blue and red line above.

To try to explain it in simpler terms, there appears to be a trend where the higher a participant's workload on day one, the more it decreased on day two. The trend is the same in both groups: for every one watt higher they worked on day one before hitting anaerobic threshold, they decreased, on average, about 0.33 watts more on the next day.

So now imagine you have a person with ME/CFS that gets 50 watts on day one and decreases by 5 watts the next day, and a healthy person that gets 70 watts on day one and decreases by 7 watts. It looks like the healthy person decreased more than the person with ME.

But wait. The healthy person, just on account of the trend we saw previously, is expected to decrease 0.33 more for every watt higher they started than the other participant. So they started 70 - 50 = 20 watts higher on day one. 20 * 0.33 = 6.6 watts. They would be expected to decrease 6.6 watts more than the other person. But they only decreased 2 watts more. Which suggests that either the healthy person isn't decreasing as much as you would expect, or the person with ME/CFS is decreasing more.

What the normalization equation above is doing is simply removing the extra "change" watts that participants are expected to decrease just from starting out higher. It puts them on level footing so we can compare the difference in watts changed that is actually interesting. So with the example of the two people above, you would add 0.33 times the number of watts they started at back onto how much they changed (a decrease counts as a negative change):

ME: -5 + (50*0.33) = increase of 11.5 watts.

HC: -7 + (70*0.33) = increase of 16.1 watts.

The actual increase values by themselves aren't important; what matters is comparing between them. To get the numbers looking prettier, we could subtract a constant like 16.1 watts from both, if we assume the healthy person's value is normal.

Anyway, what we see is that if we don't count the extra watts a person will decrease just from starting higher, the example person with ME decreased 4.6 watts more (or increased 4.6 watts less) than expected for some reason.
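The arithmetic for the two example people can be checked with a few lines of Python. This uses the rounded slope of -0.33 from the example rather than -0.3274, and `normalized_change` is just a name I made up for the equation above.

```python
SLOPE = -0.33  # rounded slope used in the worked example

def normalized_change(d1_watts, change_watts, slope=SLOPE):
    """Change in workload minus the part predicted by day 1 workload."""
    return change_watts - slope * d1_watts

# A decrease of 5 watts is a change of -5.
me = normalized_change(50, -5)
hc = normalized_change(70, -7)

# Extra drop expected for the healthy person just from starting 20 watts higher,
# compared with the extra drop actually seen.
expected_extra_drop = (70 - 50) * 0.33
observed_extra_drop = 7 - 5

print("ME normalized:", round(me, 1))
print("HC normalized:", round(hc, 1))
print("HC minus ME:", round(hc - me, 1))
print("expected vs observed extra drop:", round(expected_extra_drop, 1), observed_extra_drop)
```

The gap between the expected extra drop and the observed one is the same 4.6 watts as the gap between the two normalized values, which is the point of the normalization.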

So that's basically what I see in the real study data above. When I take away those extra free "change watts" from every participant, which is what skewing the graphs does, the ME participants decreased 9 watts more than the controls on average. (You can see the means of the two groups using normalized data in the statistical test screenshot above.)

Of course, there might not be a real correlation. Or there is one, but the real-world slope is different from the slope in this study's data (about -0.33), which is what I'm basing this on.

I only think it's a real effect, as well as close to 0.33, because both groups individually have that same slope (or very near it), and the correlation is significant in both groups.

So the upshot is that I think, based on these results, if you compared two groups with similar workloads on day one, you would see a significantly greater decrease in the ME group. Or if you used this normalization equation for dissimilar groups, you would still see a greater decrease in the ME group.

----

Edit: Slopes and confidence intervals for the two groups:

ME/CFS: -0.338, 95% CI [-0.510, -0.165]

Controls: -0.317, 95% CI [-0.457, -0.177]
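As a quick sanity check on those numbers, this sketch averages the two rounded slopes and checks that the -0.3274 used for normalization falls inside both confidence intervals. The rounded slopes average to -0.3275, so the small difference from -0.3274 is presumably just rounding in the displayed values (that part is my assumption).

```python
# (slope, CI lower bound, CI upper bound) as listed in the edit above
fits = {
    "ME/CFS": (-0.338, -0.510, -0.165),
    "Controls": (-0.317, -0.457, -0.177),
}
k = -0.3274  # slope used in the normalization equation

# Average of the two rounded group slopes.
avg = sum(slope for slope, _, _ in fits.values()) / len(fits)

# Is the normalization slope inside each group's 95% CI?
inside = {name: lo <= k <= hi for name, (_, lo, hi) in fits.items()}

print(round(avg, 4), inside)
```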