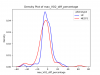

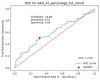

With these 4 included, I found a Cohen's d of 0.008 and a p-value of 0.93. With them excluded, things change quite dramatically: the effect size goes to d = 0.44 and the p-value to 0.000151.

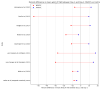

That looks like a reasonable difference. I also tried scipy.stats.mannwhitneyu on the full sample and got a p-value of 0.00503.
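One plausible reason the rank-based test still picks up a difference on the full sample is that `mannwhitneyu` only uses ranks, so a few extreme values have limited influence, while the t-test and Cohen's d are built on means and variances. Here is a minimal sketch on made-up toy data (not the study data). Making the largest pooled value even more extreme leaves the Mann-Whitney p-value unchanged but shifts the Welch t-test p-value.

```python
from scipy.stats import mannwhitneyu, ttest_ind

# Hypothetical toy data, not the study data.
group_a = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
group_b = [4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0, 11.0]

# Same data, but the largest value in the pooled sample (11.0) is made far more extreme.
# Its rank cannot change, because it was already the largest value.
group_b_extreme = group_b[:-1] + [110.0]


def compare(a, b):
    """Return (Mann-Whitney p-value, Welch's t-test p-value) for two samples."""
    _, mw_p = mannwhitneyu(a, b, alternative="two-sided")
    _, t_p = ttest_ind(a, b, equal_var=False)
    return mw_p, t_p


mw_p1, t_p1 = compare(group_a, group_b)
mw_p2, t_p2 = compare(group_a, group_b_extreme)

print(f"Mann-Whitney p: {mw_p1:.4f} -> {mw_p2:.4f}")  # identical: ranks unchanged
print(f"Welch t-test p: {t_p1:.4f} -> {t_p2:.4f}")    # changes: mean and variance shift
```

The same logic runs in reverse: removing a handful of extreme points can move a mean-based statistic a lot while barely moving a rank-based one.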

I'm getting the same Cohen's d values (both with and without the outliers), but different t-test and Mann-Whitney values.
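One possible (unconfirmed) source of different t-test p-values is the variant being run. The Python code later in this post uses Welch's test (`equal_var=False`), whereas `scipy.stats.ttest_ind` defaults to Student's pooled-variance test (`equal_var=True`). Here is a minimal sketch on toy data (not the study data) showing that the two variants disagree when group sizes and variances differ:

```python
from scipy.stats import ttest_ind

# Hypothetical toy data with unequal sizes and variances (not the study data).
a = [1.0, 2.0, 3.0]
b = [2.0, 4.0, 6.0, 8.0, 10.0]

student = ttest_ind(a, b, equal_var=True)   # pooled variance (Student's t-test)
welch = ttest_ind(a, b, equal_var=False)    # separate variances (Welch's t-test)

print(f"Student: t = {student.statistic:.3f}, p = {student.pvalue:.4f}")
print(f"Welch:   t = {welch.statistic:.3f}, p = {welch.pvalue:.4f}")
```

With equal group sizes the two t statistics coincide and only the degrees of freedom differ. With unequal groups (84 vs 71 here), both the statistic and the p-value can change.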

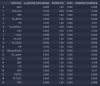

I highlighted the results for the workload absolute difference at AT because they match the t-test and Cohen's d values from your first calculations.

With outliers included:

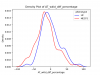

Outliers removed:

That's with Jamovi. I get the same results with Python on the full dataset:

Code:

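```python
from scipy.stats import mannwhitneyu, ttest_ind

# df is the full dataset (loading not shown).
# cohend is a user-defined Cohen's d helper (not shown; it is not part of SciPy).
col = 'AT_wkld_diff_percentage'
mecfs_df = df[df['phenotype'] == 'MECFS']
hc_df = df[df['phenotype'] == 'HC']

es = cohend(mecfs_df[col], hc_df[col])
# Two-sided by default in recent SciPy (1.7+)
U1, mw_p_val = mannwhitneyu(mecfs_df[col], hc_df[col])
# Welch's t-test (does not assume equal variances)
t_stat, tt_p_val = ttest_ind(mecfs_df[col], hc_df[col], equal_var=False)
```

Output (group sizes, then results):

```
MECFS: 84
HC: 71
Cohen's d: -0.00812
Mann-Whitney p-value: 0.04783
Welch's t-test p-value: 0.95745
```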
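`cohend` isn't part of SciPy, and its definition isn't shown here. If it follows the common pooled-standard-deviation definition, d = (mean1 - mean2) / s_pooled, a minimal sketch looks like this. It is an assumption, not necessarily the exact helper used above.

```python
import numpy as np


def cohend(x, y):
    """Cohen's d for two independent samples using the pooled standard deviation.

    Assumed definition: d = (mean(x) - mean(y)) / s_pooled, where
    s_pooled = sqrt(((n1 - 1) * var(x) + (n2 - 1) * var(y)) / (n1 + n2 - 2))
    and var is the sample variance (ddof=1).
    """
    x = np.asarray(x, dtype=float)  # also accepts pandas Series
    y = np.asarray(y, dtype=float)
    n1, n2 = len(x), len(y)
    var1, var2 = x.var(ddof=1), y.var(ddof=1)
    s_pooled = np.sqrt(((n1 - 1) * var1 + (n2 - 1) * var2) / (n1 + n2 - 2))
    return (x.mean() - y.mean()) / s_pooled


# Toy example (not the study data): means 3 and 4, both sample variances 2.5
print(round(cohend([1, 2, 3, 4, 5], [2, 3, 4, 5, 6]), 4))  # -0.6325
```

With MECFS passed first, a negative d means the MECFS mean is lower than the HC mean.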

With PI-026 removed (but the outliers still included), the Mann-Whitney p-value goes from 0.048 to 0.044 (note that the second metric differs from the one above):
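Re-running the test with one participant dropped can be wrapped in a small helper. This is a minimal sketch, assuming the participant IDs live in a column called `participant_id`, which is a hypothetical name that should be adjusted to the real dataset. The toy DataFrame below is made up and is not the study data.

```python
import pandas as pd
from scipy.stats import mannwhitneyu


def mw_pvalue(df, column, exclude_ids=(), id_col="participant_id"):
    """Two-sided Mann-Whitney p-value for MECFS vs HC, optionally excluding participants.

    id_col is a hypothetical column name; adjust it to the real dataset.
    """
    kept = df[~df[id_col].isin(list(exclude_ids))]
    mecfs = kept.loc[kept["phenotype"] == "MECFS", column]
    hc = kept.loc[kept["phenotype"] == "HC", column]
    return mannwhitneyu(mecfs, hc, alternative="two-sided").pvalue


# Hypothetical toy data, not the study data.
toy = pd.DataFrame({
    "participant_id": ["PI-001", "PI-002", "PI-003", "PI-004", "PI-005", "PI-006"],
    "phenotype": ["MECFS", "MECFS", "MECFS", "HC", "HC", "HC"],
    "AT_wkld_diff_percentage": [-12.0, -5.0, 30.0, 2.0, 4.0, 6.0],
})

p_all = mw_pvalue(toy, "AT_wkld_diff_percentage")
p_drop = mw_pvalue(toy, "AT_wkld_diff_percentage", exclude_ids=["PI-003"])
print(p_all, p_drop)
```

Passing `alternative="two-sided"` explicitly avoids depending on the SciPy version's default.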
