Simon M

Senior Member (Voting Rights)

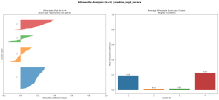

Does the study say how many individuals had at least one of these 115 risk genes? I'm still concerned that the number of genes is much too high, given the evidence we have on heritability.

We know that some of the inherited risk comes through the genetic signals identified by DecodeME, and these are likely to be 90% non-coding, so different from the genes reported here. So perhaps we can expect 10% to be accounted for by coding variants. Hence my interest in the number of individuals this study identified as having risk variants.
